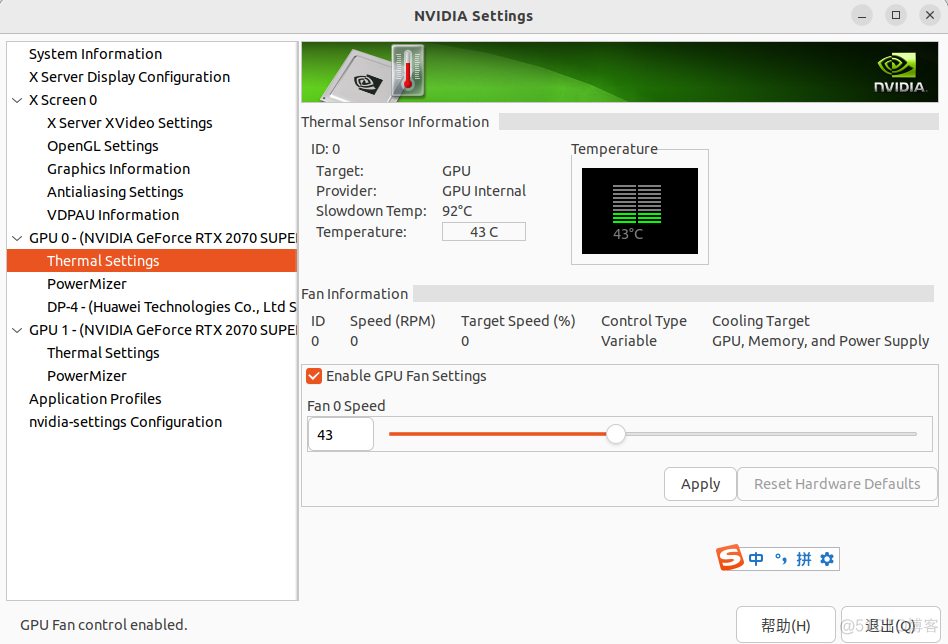

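With a monitor (desktop edition)

By default the fan speed can be adjusted by hand in the graphical interface. Oddly, once it has been adjusted with the commands given below, the graphical manual adjustment no longer works.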

=========================================

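Without a monitor (server edition)

Use the tool coolgpus to adjust the fan speed:

https://github.com/andyljones/coolgpus

The tool is really just a single Python file that adjusts the GPU fan speed by calling the attribute-setting commands of nvidia-settings: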

#!/usr/bin/python
import os
import re
import time
import argparse
from subprocess import TimeoutExpired, check_output, Popen, PIPE, STDOUT
from tempfile import mkdtemp
from contextlib import contextmanager

parser = argparse.ArgumentParser(description=r'''
GPU fan control for Linux.

By default, this uses a clamped linear fan curve, going from 30% below 55C to 99%
above 80C. There's also a small hysteresis gap, because _changes_ in fan noise
are a lot more distracting than steady fan noise.

I can't claim it's optimal, but it Works For My Machine (TM). Full load is about
75C and 80%.
''')
parser.add_argument('--temp', nargs='+', default=[55, 80], type=float, help='The temperature ranges where the fan speed will increase linearly')
parser.add_argument('--speed', nargs='+', default=[30, 99], type=float, help='The fan speed ranges')
parser.add_argument('--hyst', nargs='?', default=2, type=float, help='The hysteresis gap. Large gaps will reduce how often the fan speed is changed, but might mean the fan runs faster than necessary')
parser.add_argument('--kill', action='store_true', default=False, help='Whether to kill existing Xorg sessions')
parser.add_argument('--verbose', action='store_true', default=False, help='Whether to print extra debugging information')
parser.add_argument('--debug', action='store_true', default=False, help='Whether to only start the Xorg subprocesses, and not actually alter the fan speed. This can be useful for debugging.')
args = parser.parse_args()

T_HYST = args.hyst

assert len(args.temp) == len(args.speed), 'temp and speed should have the same length'
assert len(args.temp) >= 2, 'Please use at least two points for temp'
assert len(args.speed) >= 2, 'Please use at least two points for speed'

# EDID for an arbitrary display (one 128-byte block; the space padding after each '\n' is part of the data)
EDID = b'\x00\xff\xff\xff\xff\xff\xff\x00\x10\xac\x15\xf0LTA5.\x13\x01\x03\x804 x\xee\x1e\xc5\xaeO4\xb1&\x0ePT\xa5K\x00\x81\x80\xa9@\xd1\x00qO\x01\x01\x01\x01\x01\x01\x01\x01(<\x80\xa0p\xb0#@0 6\x00\x06D!\x00\x00\x1a\x00\x00\x00\xff\x00C592M9B95ATL\n\x00\x00\x00\xfc\x00DELL U2410\n  \x00\x00\x00\xfd\x008L\x1eQ\x11\x00\n      \x00\x1d'

# X conf for a single screen server with fake CRT attached
XORG_CONF = """Section "ServerLayout"
    Identifier "Layout0"
    Screen 0 "Screen0" 0 0
EndSection

Section "Screen"
    Identifier "Screen0"
    Device "VideoCard0"
    Monitor "Monitor0"
    DefaultDepth 8
    Option "UseDisplayDevice" "DFP-0"
    Option "ConnectedMonitor" "DFP-0"
    Option "CustomEDID" "DFP-0:{edid}"
    Option "Coolbits" "20"
    SubSection "Display"
        Depth 8
        Modes "160x200"
    EndSubSection
EndSection

Section "ServerFlags"
    Option "AllowEmptyInput" "on"
    Option "Xinerama" "off"
    Option "SELinux" "off"
EndSection

Section "Device"
    Identifier "Videocard0"
    Driver "nvidia"
    Screen 0
    Option "UseDisplayDevice" "DFP-0"
    Option "ConnectedMonitor" "DFP-0"
    Option "CustomEDID" "DFP-0:{edid}"
    Option "Coolbits" "29"
    BusID "PCI:{bus}"
EndSection

Section "Monitor"
    Identifier "Monitor0"
    Vendorname "Dummy Display"
    Modelname "160x200"
    #Modelname "1024x768"
EndSection
"""

def log_output(command, ok=(0,)):
    output = []
    if args.verbose:
        print('Command launched: ' + ' '.join(command))
    p = Popen(command, stdout=PIPE, stderr=STDOUT)
    try:
        p.wait(60)
        for line in p.stdout:
            output.append(line.decode().strip())
            if args.verbose:
                print(line.decode().strip())
        if args.verbose:
            print('Command finished')
    except TimeoutExpired:
        print('Command timed out: ' + ' '.join(command))
        raise
    finally:
        if p.returncode not in ok:
            print('\n'.join(output))
            raise ValueError('Command crashed with return code ' + str(p.returncode) + ': ' + ' '.join(command))
    return '\n'.join(output)

def decimalize(bus):
    """Converts a bus ID to an xconf-friendly format by dropping the domain and converting each hex component to
    decimal"""
    return ':'.join([str(int('0x' + p, 16)) for p in re.split('[:.]', bus[9:])])

def gpu_buses():
    return log_output(['nvidia-smi', '--format=csv,noheader', '--query-gpu=pci.bus_id']).splitlines()

def query(bus, field):
    [line] = log_output(['nvidia-smi', '--format=csv,noheader', '--query-gpu='+field, '-i', bus]).splitlines()
    return line

def temperature(bus):
    return int(query(bus, 'temperature.gpu'))

def config(bus):
    """Writes out the X server config for a GPU to a temporary directory"""
    tempdir = mkdtemp(prefix='cool-gpu-' + bus)
    edid = os.path.join(tempdir, 'edid.bin')
    conf = os.path.join(tempdir, 'xorg.conf')

    with open(edid, 'wb') as e, open(conf, 'w') as c:
        e.write(EDID)
        c.write(XORG_CONF.format(edid=edid, bus=decimalize(bus)))

    return conf

def xserver(display, bus):
    """Starts the X server for a GPU under a certain display ID"""
    conf = config(bus)
    xorgargs = ['Xorg', display, '-once', '-config', conf]
    print('Starting xserver: '+' '.join(xorgargs))
    p = Popen(xorgargs)
    if args.verbose:
        print('Started xserver')
    return p

def xserver_pids():
    return list(map(int, log_output(['pgrep', 'Xorg'], ok=(0, 1)).splitlines()))

def kill_xservers():
    """If there are already X servers attach to the GPUs, they'll stop us from setting up our own. Right now we
    can't make use of existing X servers for the reasons detailed here https://github.com/andyljones/coolgpus/issues/1
    """
    servers = xserver_pids()
    if servers:
        if args.kill:
            print('Killing all running X servers, including ' + ", ".join(map(str, servers)))
            log_output(['pkill', 'Xorg'], ok=(0, 1))
            for _ in range(10):
                if xserver_pids():
                    print('Awaiting X server shutdown')
                    time.sleep(1)
                else:
                    print('All X servers killed')
                    return
            raise IOError('Failed to kill existing X servers. Try killing them yourself before running this script')
        else:
            raise IOError('There are already X servers active. Either run the script with the `--kill` switch, or kill them yourself first')
    else:
        print('No existing X servers, we\'re good to go')
        return

@contextmanager
def xservers(buses):
    """A context manager for launching an X server for each GPU in a list. Yields the mapping from bus ID to
    display ID, and cleans up the X servers on exit."""
    kill_xservers()
    displays, servers = {}, {}
    try:
        for d, bus in enumerate(buses):
            displays[bus] = ':' + str(d)
            servers[bus] = xserver(displays[bus], bus)
        yield displays
    finally:
        for bus, server in servers.items():
            print('Terminating xserver for display ' + displays[bus])
            server.terminate()

def determine_segment(t):
    '''Determines which piece (segment) of a user-specified piece-wise function
    t belongs to. For example:
    args.temp = [30, 50, 70, 90]
    (segment 0) 30 (0 segment) 50 (1 segment) 70 (2 segment) 90 (segment 2)
    args.speed = [10, 30, 50, 75]
    (segment 0) 10 (0 segment) 30 (1 segment) 50 (2 segment) 75 (segment 2)'''
    # TODO: assert temps and speeds are sorted
    # the loop exits when:
    # a) t is less than the min temp (returns: segment 0)
    # b) t belongs to a segment (returns: the segment)
    # c) t is higher than the max temp (return: the last segment)
    segments = zip(
        args.temp[:-1], args.temp[1:],
        args.speed[:-1], args.speed[1:])
    for temp_a, temp_b, speed_a, speed_b in segments:
        if t < temp_a:
            break
        if temp_a <= t < temp_b:
            break
    return temp_a, temp_b, speed_a, speed_b

def min_speed(t):
    temp_a, temp_b, speed_a, speed_b = determine_segment(t)
    load = (t - temp_a)/float(temp_b - temp_a)
    return int(min(max(speed_a + (speed_b - speed_a)*load, speed_a), speed_b))

def max_speed(t):
    return min_speed(t + T_HYST)

def target_speed(s, t):
    l, u = min_speed(t), max_speed(t)
    return min(max(s, l), u), l, u

def assign(display, command):
    # Our duct-taped-together xorg.conf leads to some innocent - but voluminous - warning messages about
    # failing to authenticate. Here we dispose of them
    log_output(['nvidia-settings', '-a', command, '-c', display])

def set_speed(display, target):
    assign(display, '[gpu:0]/GPUFanControlState=1')
    assign(display, '[fan:0]/GPUTargetFanSpeed='+str(int(target)))

def manage_fans(displays):
    """Launches an X server for each GPU, then continually loops over the GPU fans to set their speeds according
    to the GPU temperature. When interrupted, it releases the fan control back to the driver and shuts down the
    X servers"""
    try:
        speeds = {b: 0 for b in displays}
        while True:
            for bus, display in displays.items():
                temp = temperature(bus)
                s, l, u = target_speed(speeds[bus], temp)
                if s != speeds[bus]:
                    print('GPU {}, {}C -> [{}%-{}%]. Setting speed to {}%'.format(display, temp, l, u, s))
                    set_speed(display, s)
                    speeds[bus] = s
                else:
                    print('GPU {}, {}C -> [{}%-{}%]. Leaving speed at {}%'.format(display, temp, l, u, s))
            time.sleep(5)
    finally:
        for bus, display in displays.items():
            assign(display, '[gpu:0]/GPUFanControlState=0')
            print('Released fan speed control for GPU at '+display)

def debug_loop(displays):
    displays = '\n'.join(str(d) + ' - ' + str(b) for b, d in displays.items())
    print('\n\n\nLOOPING IN DEBUG MODE. DISPLAYS ARE:\n' + displays + '\n\n\n')
    while True:
        print('Looping in debug mode')
        time.sleep(5)

def run():
    buses = gpu_buses()
    with xservers(buses) as displays:
        if args.debug:
            debug_loop(displays)
        else:
            manage_fans(displays)

if __name__ == '__main__':
    run()

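Note that this code is meant to run on a server, that is, with no Xorg process running. On a desktop system X11 has to be killed before the script can run successfully. On Ubuntu 22.04 Desktop, for example, you need to run sudo service gdm3 stop to shut down the desktop and X11 along with it.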
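The fan curve described in the script's help text can be tried out in isolation. The following is a minimal sketch, assuming only the two default points (55C at 30%, 80C at 99%) and the default hysteresis gap of 2. The constants `TEMPS`, `SPEEDS` and `HYST` are names chosen here for illustration, and the multi-segment lookup done by `determine_segment` is left out.

```python
# Simplified two-point version of the script's fan curve (assumed defaults).
TEMPS = (55.0, 80.0)   # temperature range in C
SPEEDS = (30.0, 99.0)  # fan speed range in %
HYST = 2.0             # hysteresis gap in C


def min_speed(t):
    """Clamped linear interpolation between the two curve points."""
    load = (t - TEMPS[0]) / (TEMPS[1] - TEMPS[0])
    speed = SPEEDS[0] + (SPEEDS[1] - SPEEDS[0]) * load
    return int(min(max(speed, SPEEDS[0]), SPEEDS[1]))


def max_speed(t):
    """Upper edge of the hysteresis band: the curve shifted by HYST degrees."""
    return min_speed(t + HYST)


def target_speed(current, t):
    """Keep the current speed if it already lies in [min_speed, max_speed]."""
    return min(max(current, min_speed(t)), max_speed(t))


speed = 0
for temp in (50, 60, 61, 60, 85):
    speed = target_speed(speed, temp)
    print('{}C -> {}%'.format(temp, speed))
```

In this run the speed rises to 46% at 61C and stays there when the temperature drops back to 60C, because 46% still lies inside the band for 60C. That is the hysteresis at work: small temperature wobbles do not change the fan speed.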

---------------------------------------------------

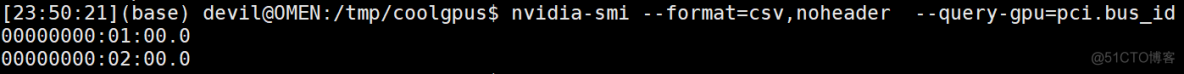

This code teaches us several things:

Query the PCI bus IDs of the NVIDIA GPUs on the motherboard:

nvidia-smi --format=csv,noheader --query-gpu=pci.bus_id

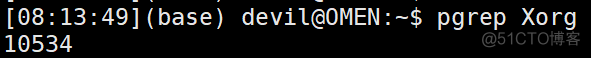
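The bus IDs printed by this command carry a PCI domain prefix and hexadecimal components, while the script writes the `BusID` entry of xorg.conf with decimal components. Below is a small sketch of the conversion that the script's `decimalize` function performs, assuming the 8-digit domain form shown in this post. The name `to_xorg_bus_id` is made up for this example.

```python
import re


def to_xorg_bus_id(bus):
    """Convert an nvidia-smi bus ID such as '00000000:01:00.0' to '1:0:0'.

    Assumes the 8-digit domain form printed by nvidia-smi: the first nine
    characters ('00000000:') are the domain and are dropped.
    """
    parts = re.split('[:.]', bus[9:])
    # Each remaining component (bus, device, function) is hexadecimal.
    return ':'.join(str(int(p, 16)) for p in parts)


print(to_xorg_bus_id('00000000:01:00.0'))  # 1:0:0
print(to_xorg_bus_id('00000000:0A:00.0'))  # 10:0:0
```

The second call shows why the conversion matters: a card on hexadecimal bus `0A` has to be written as bus 10.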

Query the process IDs of the X11 (Xorg) processes currently running on the system:

pgrep Xorg
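`pgrep` exits with status 1 when nothing matches, and the script treats that as a normal result rather than an error. A minimal sketch of the same check follows. `parse_pids` and `xorg_pids` are hypothetical helper names, and the call assumes `pgrep` is installed.

```python
import subprocess


def parse_pids(output):
    """Turn pgrep output (one PID per line) into a list of ints."""
    return [int(token) for token in output.split()]


def xorg_pids():
    """Return the PIDs of running Xorg processes (an empty list if none)."""
    result = subprocess.run(['pgrep', 'Xorg'], stdout=subprocess.PIPE,
                            universal_newlines=True)
    # Exit status 0: matches found; 1: no matches; anything else is an error.
    if result.returncode not in (0, 1):
        raise RuntimeError('pgrep failed with status %d' % result.returncode)
    return parse_pids(result.stdout)
```

An empty list from `xorg_pids()` means no X server is running, so the virtual X servers can be started.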

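An X11 server reads an xorg.conf file at startup. By default, the X11 server started for an NVIDIA GPU reads /etc/X11/xorg.conf.

There are many tutorials online on setting NVIDIA fan speeds from the command line, but the feedback on them is mixed and many people report that they do not work. It turns out these tutorials all target single-GPU machines and do not apply to multi-GPU machines. The reason is that NVIDIA's official fan speed commands only work on a GPU that is driving a display. To use them on a multi-GPU machine, every GPU must therefore have its own X11 server producing display output. On a typical multi-GPU machine only one GPU handles the display and the others do not, which is why the nvidia-settings commands found online fail with all kinds of errors there. The code above takes an unconventional way around this: it kills the existing X11 processes, generates a fake display configuration for each GPU, and starts one X11 process per GPU from that configuration. Note, however, that this requires shutting down the real X11 process that drives the actual display, so the real screen loses its output and goes black.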
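The one-X-server-per-GPU idea can be sketched as a mapping from each bus ID to its own display number, mirroring what `xservers` does in the script. `plan_xservers` is a name introduced here, and the config paths are placeholders rather than real files.

```python
def plan_xservers(conf_by_bus):
    """Assign display :0, :1, ... to each GPU and build its Xorg command.

    conf_by_bus maps a bus ID to the path of the xorg.conf generated for it.
    Returns {bus: (display, argv)} in the order the buses were given.
    """
    plan = {}
    for index, (bus, conf) in enumerate(conf_by_bus.items()):
        display = ':' + str(index)
        plan[bus] = (display, ['Xorg', display, '-once', '-config', conf])
    return plan


# Placeholder paths, for illustration only.
example = {
    '00000000:01:00.0': '/tmp/gpu0/xorg.conf',
    '00000000:02:00.0': '/tmp/gpu1/xorg.conf',
}
for bus, (display, argv) in plan_xservers(example).items():
    print(bus, display, ' '.join(argv))
```

Each GPU ends up behind a different display number, which is what later lets nvidia-settings address them one at a time.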

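On Ubuntu Desktop, the desktop manager monitors X11 and starts a new X11 process whenever it finds the old one killed, so the real X11 process can never be removed by killing it alone. The fix on Ubuntu 22.04 is to stop the desktop manager first with sudo service gdm3 stop, then look up any remaining real X11 process with pgrep Xorg and kill it.

Adjusting the fan speed with the nvidia-settings commands on a single-GPU machine:

Enable manual control:

nvidia-settings -a [gpu:0]/GPUFanControlState=1 -c :0

Set the speed to 50%:

nvidia-settings -a [fan:0]/GPUTargetFanSpeed=50 -c :0

Disable manual control and return to automatic mode:

nvidia-settings -a [gpu:0]/GPUFanControlState=0 -c :0

Because these commands only work in the single-GPU case, [gpu:0] and [fan:0] are fixed. -c :0 selects the X display number, which is also fixed here because there is one GPU and one screen.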
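Since the last step is easy to forget, the three commands can be wrapped so that control always returns to the driver, much as the script does in its `finally` block. This is only a sketch: `fixed_fan_speed` and its `run` parameter are names invented here, and `run` defaults to `subprocess.check_call` so that a dry run can substitute something harmless.

```python
import subprocess
from contextlib import contextmanager


def assign(attr, display, run):
    """Issue one nvidia-settings attribute assignment on the given display."""
    run(['nvidia-settings', '-a', attr, '-c', display])


@contextmanager
def fixed_fan_speed(speed, display=':0', run=subprocess.check_call):
    """Hold a fixed fan speed, returning control to the driver on exit."""
    assign('[gpu:0]/GPUFanControlState=1', display, run)
    try:
        assign('[fan:0]/GPUTargetFanSpeed=' + str(int(speed)), display, run)
        yield
    finally:
        assign('[gpu:0]/GPUFanControlState=0', display, run)


# Dry run: print the commands instead of executing them.
with fixed_fan_speed(50, run=lambda argv: print(' '.join(argv))):
    pass
```

With the default `run`, the body of the `with` block is where the workload (or a sleep) would go; the fan stays at the fixed speed until the block exits.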

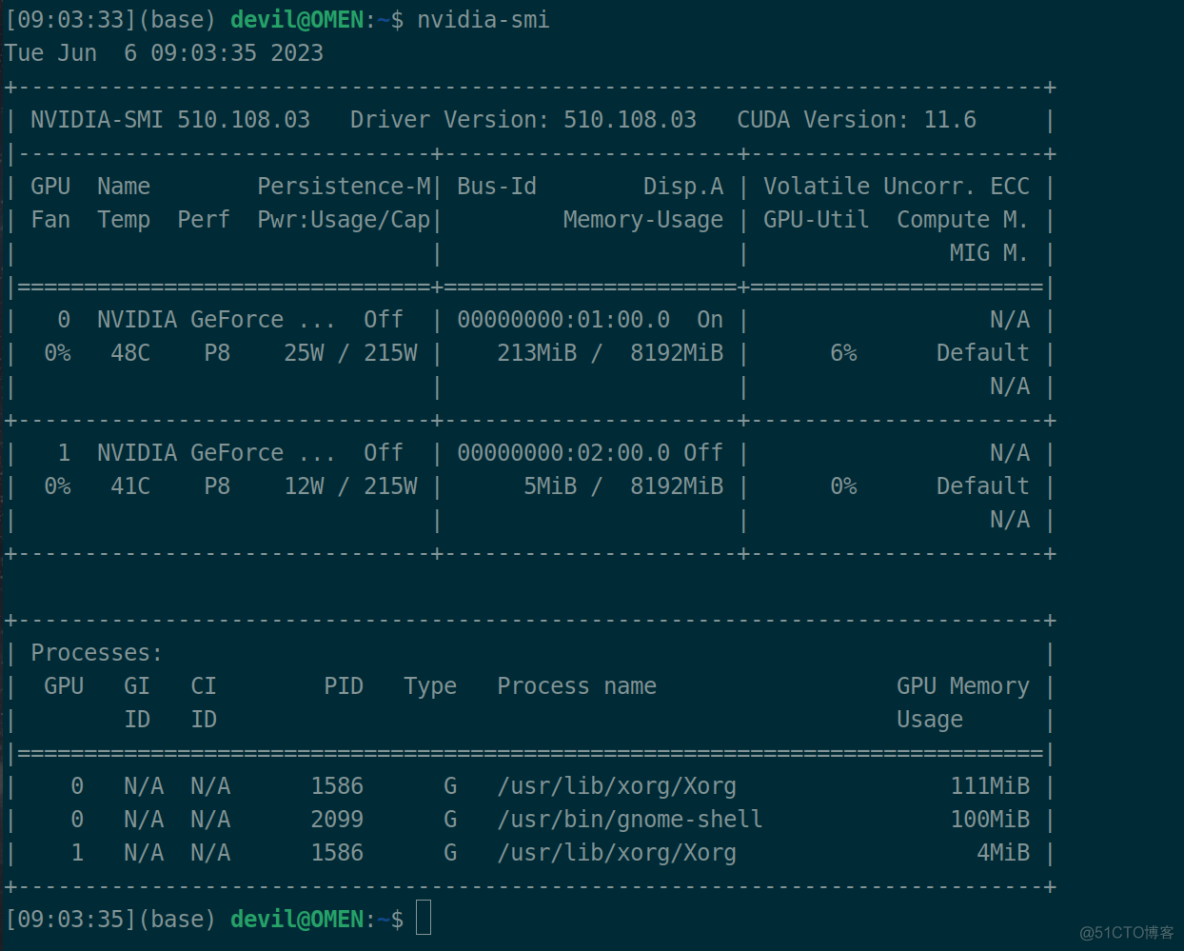

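PS: In practice, if a machine has two GPUs with one monitor attached to each, there is still only one X11 process:

The output above lists Xorg twice, but both entries are the same process (PID 1586) as seen on the two GPUs. GPU 0 drives the display and uses 111 MB of GPU memory; GPU 1 produces no display output and uses 4 MB.

Putting this together, the fake-display method is currently the only way to control the fan speed manually with the official commands. Its main drawback is that the real X11 has to be killed, so the desktop can no longer be displayed. Then again, multi-GPU machines that need manual fan control are probably used for compute workloads, in which case this does not matter.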

====================================

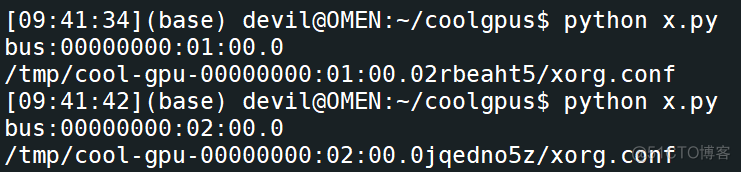

Based on the code above, here is a procedure for running the fans at a fixed speed, assumed here to be 50%:

nvidia-smi --format=csv,noheader --query-gpu=pci.bus_id

00000000:01:00.0

00000000:02:00.0

File x.py

from tempfile import mkdtemp
import os
import re

# EDID for an arbitrary display (one 128-byte block; the space padding after each '\n' is part of the data)
EDID = b'\x00\xff\xff\xff\xff\xff\xff\x00\x10\xac\x15\xf0LTA5.\x13\x01\x03\x804 x\xee\x1e\xc5\xaeO4\xb1&\x0ePT\xa5K\x00\x81\x80\xa9@\xd1\x00qO\x01\x01\x01\x01\x01\x01\x01\x01(<\x80\xa0p\xb0#@0 6\x00\x06D!\x00\x00\x1a\x00\x00\x00\xff\x00C592M9B95ATL\n\x00\x00\x00\xfc\x00DELL U2410\n  \x00\x00\x00\xfd\x008L\x1eQ\x11\x00\n      \x00\x1d'

# X conf for a single screen server with fake CRT attached
XORG_CONF = """Section "ServerLayout"
    Identifier "Layout0"
    Screen 0 "Screen0" 0 0
EndSection

Section "Screen"
    Identifier "Screen0"
    Device "VideoCard0"
    Monitor "Monitor0"
    DefaultDepth 8
    Option "UseDisplayDevice" "DFP-0"
    Option "ConnectedMonitor" "DFP-0"
    Option "CustomEDID" "DFP-0:{edid}"
    Option "Coolbits" "20"
    SubSection "Display"
        Depth 8
        Modes "160x200"
    EndSubSection
EndSection

Section "ServerFlags"
    Option "AllowEmptyInput" "on"
    Option "Xinerama" "off"
    Option "SELinux" "off"
EndSection

Section "Device"
    Identifier "Videocard0"
    Driver "nvidia"
    Screen 0
    Option "UseDisplayDevice" "DFP-0"
    Option "ConnectedMonitor" "DFP-0"
    Option "CustomEDID" "DFP-0:{edid}"
    Option "Coolbits" "29"
    BusID "PCI:{bus}"
EndSection

Section "Monitor"
    Identifier "Monitor0"
    Vendorname "Dummy Display"
    Modelname "160x200"
    #Modelname "1024x768"
EndSection
"""

bus = input("bus:")

def decimalize(bus):
    """Converts a bus ID to an xconf-friendly format by dropping the domain and converting each hex component to
    decimal"""
    return ':'.join([str(int('0x' + p, 16)) for p in re.split('[:.]', bus[9:])])

# Writes out the X server config for a GPU to a temporary directory
tempdir = mkdtemp(prefix='cool-gpu-' + bus)
edid = os.path.join(tempdir, 'edid.bin')
conf = os.path.join(tempdir, 'xorg.conf')

with open(edid, 'wb') as e, open(conf, 'w') as c:
    e.write(EDID)
    c.write(XORG_CONF.format(edid=edid, bus=decimalize(bus)))

print(conf)

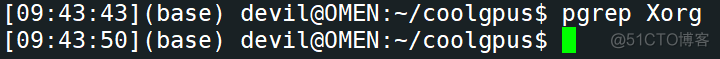

sudo service gdm3 stop

pgrep Xorg confirms that the X11 process has been killed

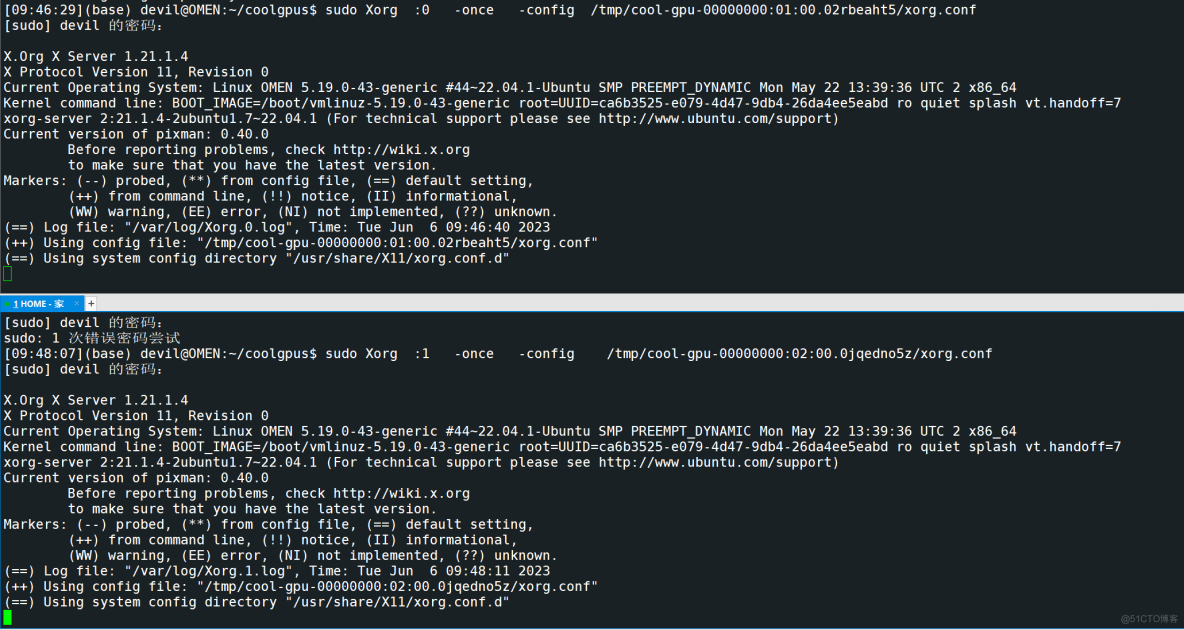

Assign a virtual screen to each of the two GPUs and start a virtual X11 process for each:

Take care to give each GPU a different display number:

sudo Xorg :0 -once -config /tmp/cool-gpu-00000000:01:00.02rbeaht5/xorg.conf

sudo Xorg :1 -once -config /tmp/cool-gpu-00000000:02:00.0jqedno5z/xorg.conf

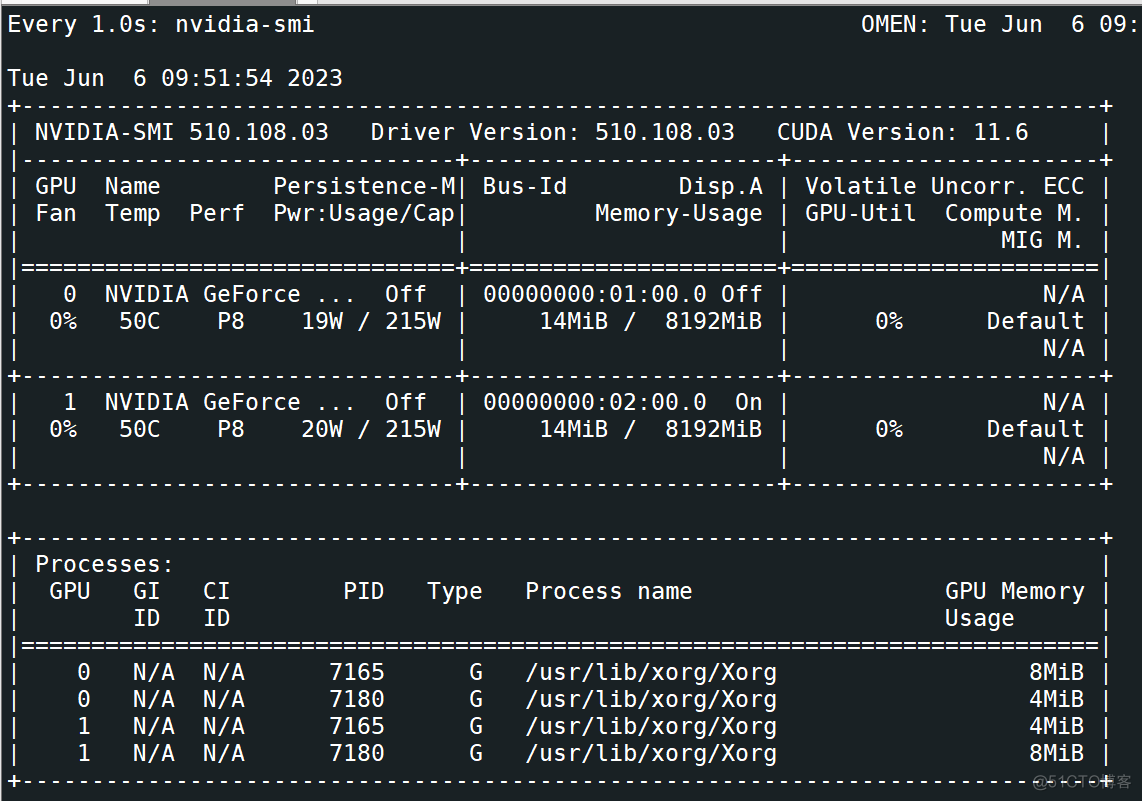

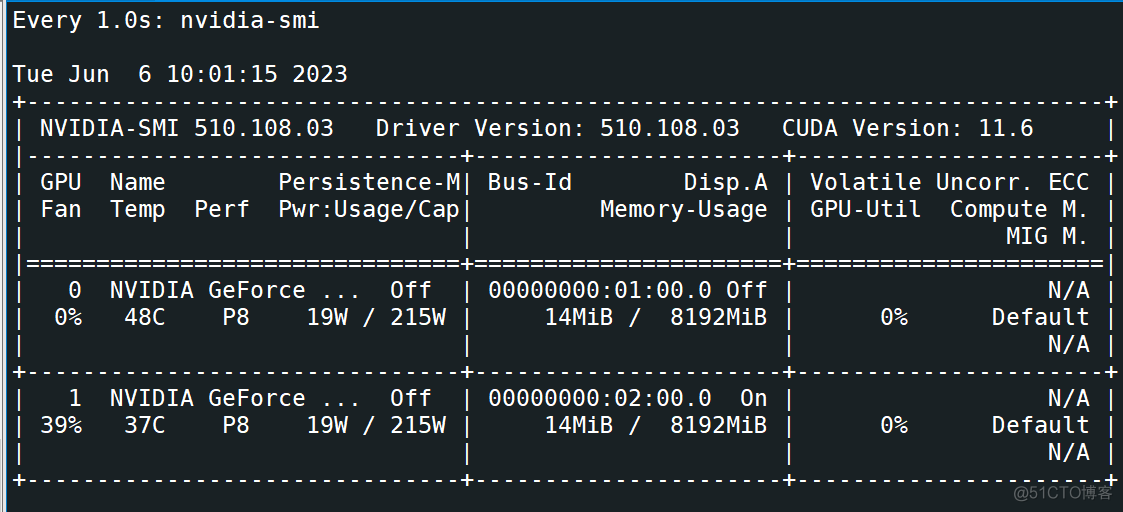

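Process 7165 is the virtual X11 process for GPU 0, and it also takes up some memory on GPU 1; process 7180 is the virtual X11 process for GPU 1, and it also takes up some memory on GPU 0.

Because each GPU has its own X11 process running a virtual screen, each GPU is GPU 0 within its own display, and its fan is fan 0. The nvidia-settings commands therefore tell the GPUs apart by display number:

For the GPU behind virtual display :0, enable manual control:

nvidia-settings -a [gpu:0]/GPUFanControlState=1 -c :0

Set the speed to 50%:

nvidia-settings -a [fan:0]/GPUTargetFanSpeed=50 -c :0

Disable manual control and return to automatic mode:

nvidia-settings -a [gpu:0]/GPUFanControlState=0 -c :0

For the GPU behind virtual display :1, enable manual control:

nvidia-settings -a [gpu:0]/GPUFanControlState=1 -c :1

Set the speed to 50%:

nvidia-settings -a [fan:0]/GPUTargetFanSpeed=50 -c :1

Disable manual control and return to automatic mode:

nvidia-settings -a [gpu:0]/GPUFanControlState=0 -c :1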
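With more than two cards, typing these commands per display gets tedious. A small sketch that applies the same fixed speed to a list of virtual displays follows. `set_fixed_speed` and its `dry_run` flag are assumptions made for this example; by default the commands are only built and returned, not executed.

```python
import subprocess


def set_fixed_speed(displays, speed, dry_run=True):
    """Set the same fixed fan speed on every virtual display.

    With dry_run=True the commands are only returned, not executed.
    """
    commands = []
    for display in displays:
        # [gpu:0] and [fan:0] are the same everywhere; only -c differs.
        for attr in ('[gpu:0]/GPUFanControlState=1',
                     '[fan:0]/GPUTargetFanSpeed=' + str(int(speed))):
            commands.append(['nvidia-settings', '-a', attr, '-c', display])
    if not dry_run:
        for argv in commands:
            subprocess.run(argv, check=True)
    return commands


for argv in set_fixed_speed([':0', ':1'], 50):
    print(' '.join(argv))
```

Passing the argument list straight to `subprocess` also avoids any shell interpretation of the square brackets in `[gpu:0]` and `[fan:0]`.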

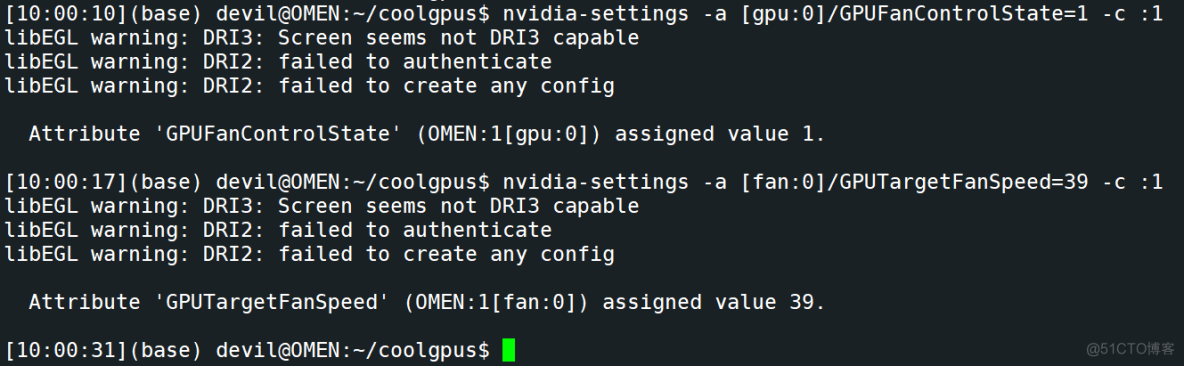

Example: setting the fan of GPU 1:

The specified speed was reached exactly:

====================================================

PS:

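In use I found that sometimes, after switching back to automatic control, the fan speed shown in nvidia-smi stops changing and stays at the value it had at the moment of the switch, even though the fan is in fact under automatic control again. I have no fix for this occasional stale reading, but since it happens rarely it does not really get in the way.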
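To watch whether the reported value is stuck, the fan speed shown by nvidia-smi can be read through its query interface. This is a sketch, assuming the `fan.speed` query field and the `nounits` CSV option are available in the installed driver. `parse_fan_speeds` and `read_fan_speeds` are helper names introduced here, and they only observe the reported value, not the physical fan.

```python
import subprocess


def parse_fan_speeds(output):
    """Parse 'nvidia-smi --query-gpu=fan.speed' CSV output, one GPU per line.

    Returns a list of integer percentages, with None for values that are not
    numeric (for example '[N/A]').
    """
    speeds = []
    for line in output.splitlines():
        value = line.strip().rstrip('%').strip()
        if value:
            speeds.append(int(value) if value.isdigit() else None)
    return speeds


def read_fan_speeds():
    """Query the reported fan speed of every GPU (requires nvidia-smi)."""
    output = subprocess.check_output(
        ['nvidia-smi', '--format=csv,noheader,nounits', '--query-gpu=fan.speed'],
        universal_newlines=True)
    return parse_fan_speeds(output)


print(parse_fan_speeds('50\n32\n'))  # [50, 32]
```

Calling `read_fan_speeds()` every few seconds while the GPU load changes shows whether the reading still moves after the switch back to automatic mode.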