Monitoring IIS Web Logs with ELK and Displaying Them in Grafana

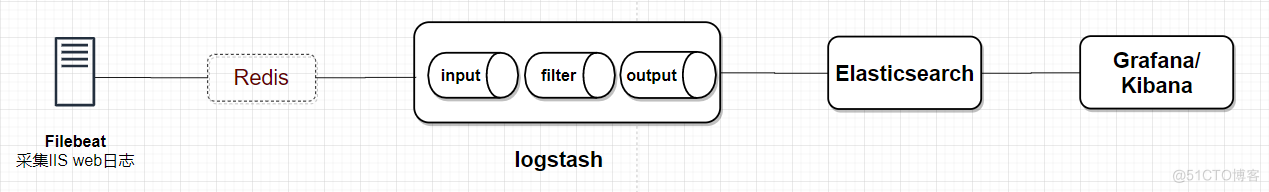

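This post shows how to display IIS web logs using Filebeat + Logstash + Grafana. Because the data volume is small, no Redis buffer is used: Filebeat ships the logs directly to Logstash for filtering, Logstash sends them to Elasticsearch for storage, and Grafana displays the data.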

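The components are:

- Filebeat: the lightweight collection agent from the Beats family, which makes it easy to collect many kinds of logs. See the official Filebeat documentation.

- Logstash: the data filtering component, with a rich set of plugins. It has three main stages: Input (data input), Filter (data filtering), and Output (data output). See the official Logstash documentation.

- Elasticsearch: a full-text search and storage engine written in Java. I have not studied it in detail yet. See the official Elasticsearch documentation.

- **Kibana:** a visualization platform for displaying, searching, and managing the data in Elasticsearch. See the official Kibana documentation.

- **Grafana:** a visualization platform that can connect to different data sources and chart their data. I am more familiar with Grafana, so it is used for the dashboards here. See the official Grafana documentation.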

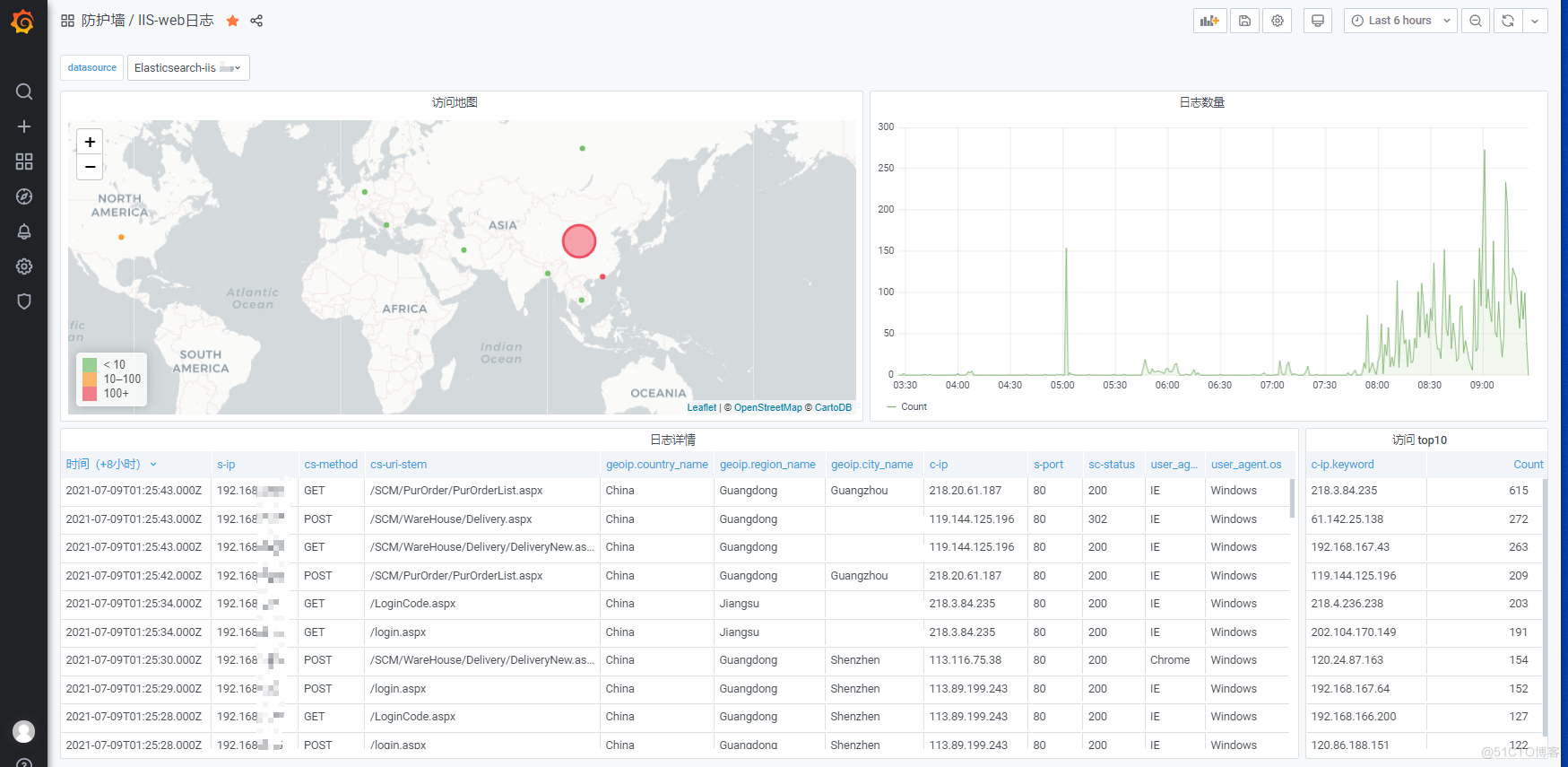

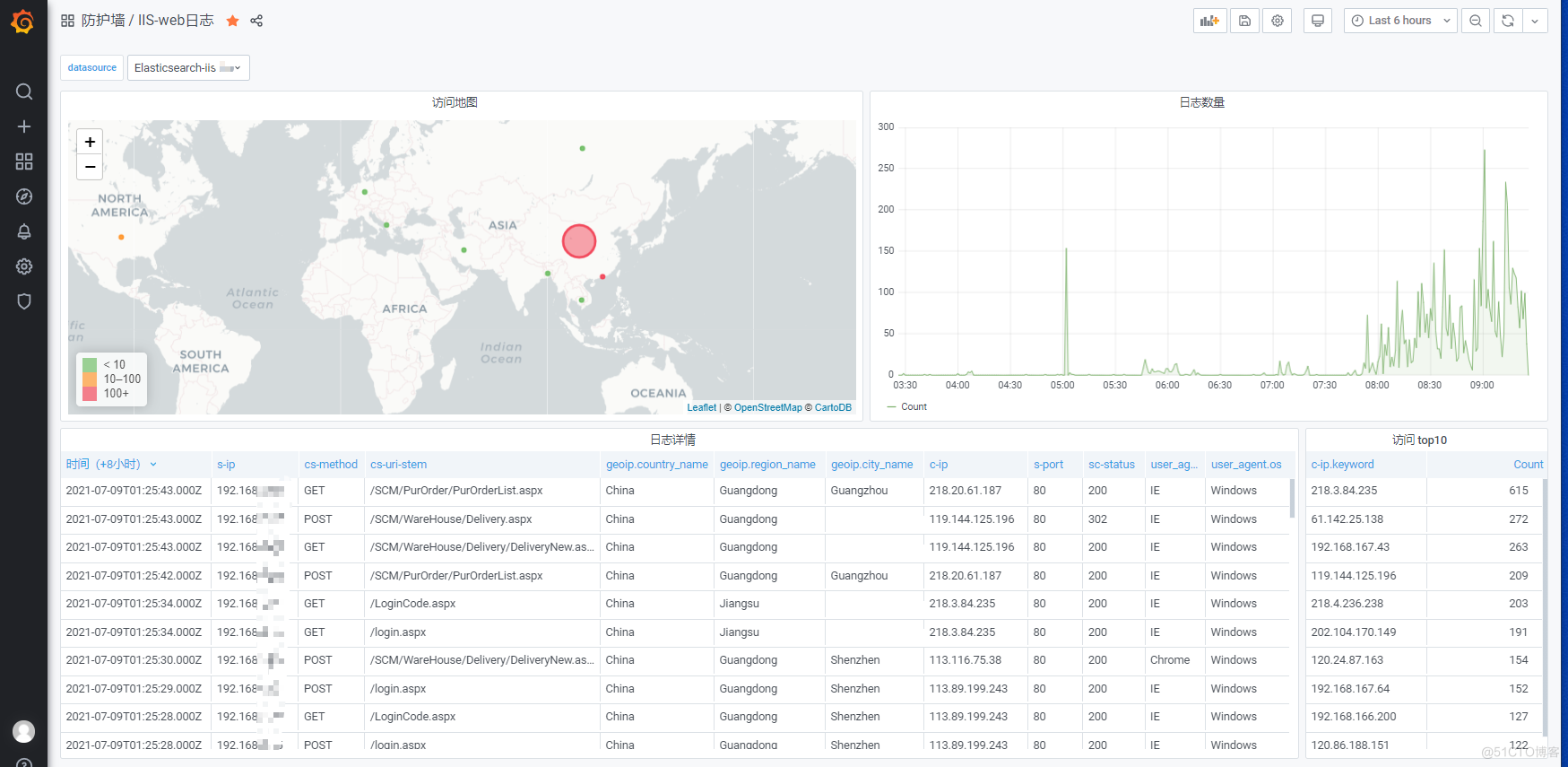

Preview of the Grafana dashboard

1. Install ELK

See the guide "Installing Elasticsearch + Logstash + Kibana + Head".

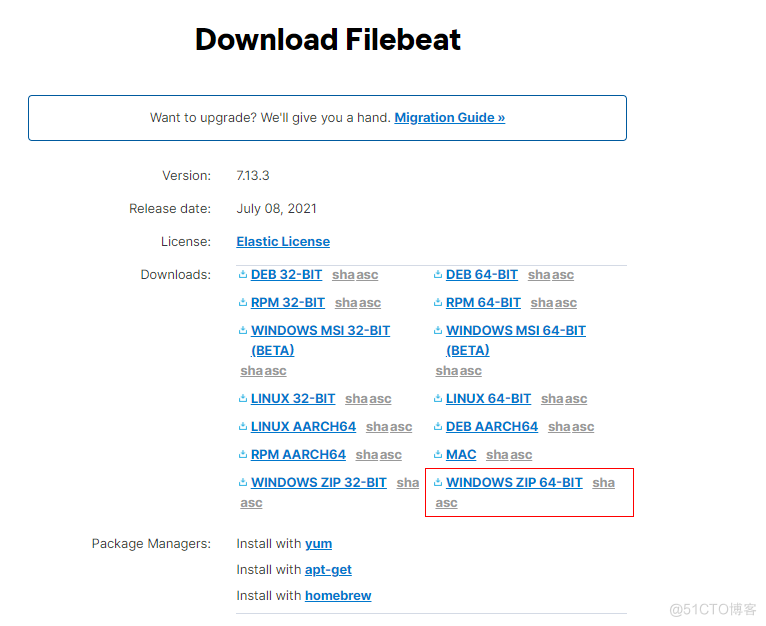

2. Install Filebeat

Download Filebeat.

Edit filebeat.yml:

    # ============================== Filebeat inputs ===============================
    filebeat.inputs:
    # Each - is an input. Most options can be set at the input level, so
    # you can use different inputs for various configurations.
    # Below are the input specific configurations.

    #-------------------- Input 1 ---------------------------
    - type: log
      # Enable this input
      enabled: true
      # Log file paths
      paths:
        - C:\inetpub\logs\LogFiles\W3SVC1\u_ex*
      # Exclude lines that start with #
      exclude_lines: ['^#']
      # Custom "type" field that tells the logs of different websites apart
      fields:
        type: 'filebeat-iis-25'
      fields_under_root: true
      ### For multi-line logs (e.g. Java logs), uncomment the next 3 lines
      #multiline.pattern: ^\[
      #multiline.negate: false
      #multiline.match: after

    #-------------------- Input 2 ---------------------------
    - type: log
      enabled: true
      paths:
        - C:\inetpub\logs\LogFiles\W3SVC2\u_ex*
      exclude_lines: ['^#']
      fields:
        type: 'filebeat-iis-27'
      fields_under_root: true

    # ============================== Filebeat modules ==============================
    filebeat.config.modules:
      # Glob pattern for configuration loading
      path: ${path.config}/modules.d/*.yml
      # Set to true to enable config reloading
      reload.enabled: false

    # ======================= Elasticsearch template setting =======================
    setup.template.settings:
      index.number_of_shards: 1

    # ================================== General ===================================
    #fields:
    #  env: staging

    # =================================== Kibana ===================================
    setup.kibana:
      #host: "192.168.0.170:5601"
      #username: "elastic"
      #password: "123456"

    # ================================== Outputs ===================================
    # ---------------------------- Elasticsearch Output ----------------------------
    #output.elasticsearch:
    #  hosts: ["192.168.0.170:9200"]
    #  username: "elastic"
    #  password: "123456"

    # ------------------------------ Logstash Output -------------------------------
    output.logstash:
      hosts: ["192.168.0.170:5044"]

    # ================================= Processors =================================
    processors:
      - add_host_metadata:
          when.not.contains.tags: forwarded
      - add_cloud_metadata: ~
      - add_docker_metadata: ~
      - add_kubernetes_metadata: ~
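
To make the effect of `exclude_lines` and `fields` / `fields_under_root` more concrete, here is a minimal Python sketch of what each input does to the raw log lines. It is only an illustration, not Filebeat's actual implementation: the function name `to_events` and the sample log lines are made up for this example.

```python
import re

# Mirrors exclude_lines: ['^#'] from filebeat.yml
EXCLUDE_LINES = [re.compile(r"^#")]


def to_events(lines, site_type):
    """Turn raw log lines into event dicts, roughly the way the input above is configured.

    Hypothetical helper for illustration only; Filebeat does much more
    (file state tracking, metadata, batching, and so on).
    """
    events = []
    for line in lines:
        line = line.rstrip("\r\n")
        # Skip IIS header lines such as "#Fields: ..."
        if any(pattern.search(line) for pattern in EXCLUDE_LINES):
            continue
        # fields_under_root: true puts "type" at the top level of the event
        events.append({"message": line, "type": site_type})
    return events


# Made-up sample lines in the IIS W3C layout
sample = [
    "#Software: Microsoft Internet Information Services 10.0\r\n",
    "#Fields: date time s-ip cs-method cs-uri-stem\r\n",
    "2021-03-01 08:15:30 192.168.0.25 GET /index.html - 80 - 10.0.0.8 Mozilla/5.0 - 200 0 0 15\r\n",
]
events = to_events(sample, "filebeat-iis-25")
print(events)
```

Only the request line survives, and it carries the top-level `type` value that the Logstash configuration later uses to route events.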

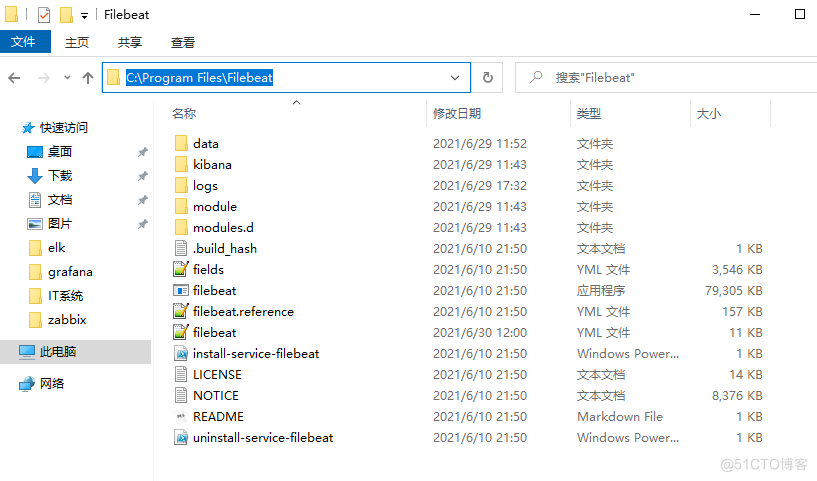

Move the folder to C:\Program Files and rename it to Filebeat.

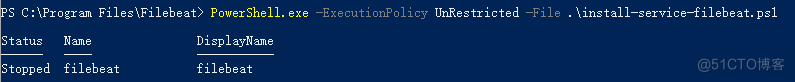

Open PowerShell as administrator:

    PS C:\Windows\system32> cd 'C:\Program Files\Filebeat'
    PS C:\Program Files\Filebeat> .\install-service-filebeat.ps1

When the installation finishes, you should see:

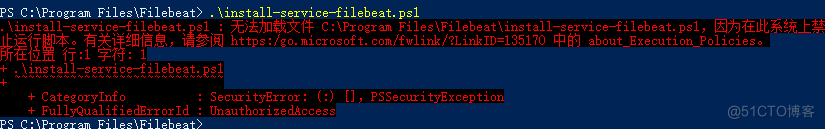

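If a message says the script cannot be installed, run the following command instead:

    PowerShell.exe -ExecutionPolicy UnRestricted -File .\install-service-filebeat.ps1

Test the configuration (`setup -e` runs Filebeat's initial setup and logs to the console; to only validate filebeat.yml, `.\filebeat.exe test config` can be used instead):

    .\filebeat.exe setup -e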

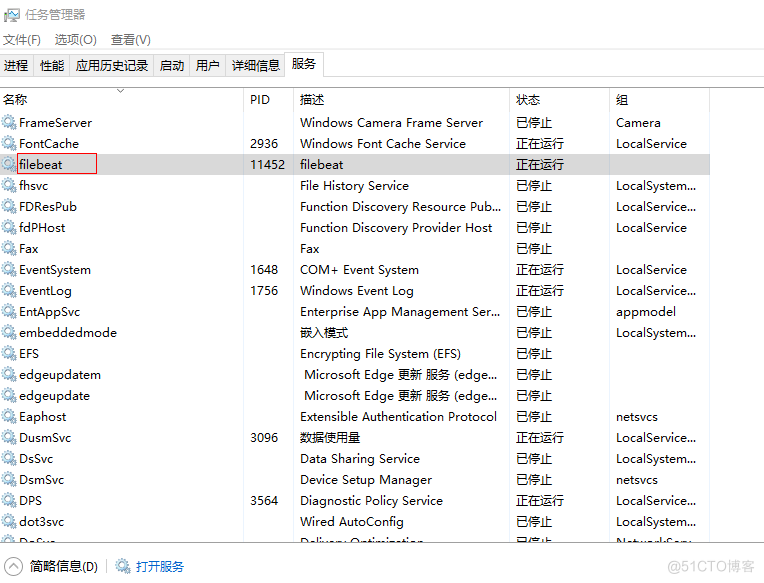

Start the service:

    Start-Service filebeat

3. Configure Logstash

    vim /opt/logstash/config/iis_log.conf

    #------------------- Input ------------------------
    input {
      beats {
        host => "0.0.0.0"
        port => 5044
      }
    }

    #------------------- Filter -----------------------
    filter {
      if [type] =~ "filebeat-iis-.*" {
        # Drop comment lines that start with #
        if [message] =~ "^#" {
          drop {}
        }
        grok {
          # match extracts fields from the message; grok ships with many built-in patterns that can be used directly
          match => ["message", "%{TIMESTAMP_ISO8601:log_timestamp} (%{IPORHOST:s-ip}|-) (%{WORD:cs-method}|-) %{NOTSPACE:cs-uri-stem} %{NOTSPACE:cs-uri-query} (%{NUMBER:s-port}|-) (%{NOTSPACE:c-username}|-) (%{IPORHOST:c-ip}|-) %{NOTSPACE:cs-useragent} %{NOTSPACE:cs-referer} (%{NUMBER:sc-status}|-) (%{NUMBER:sc-substatus}|-) (%{NUMBER:sc-win32-status}|-) (%{NUMBER:time-taken}|-)"]
        }
        # date filter: set @timestamp from the time recorded in the log line
        date {
          match => ["log_timestamp","yyyy-MM-dd HH:mm:ss"]
          # IIS writes log times in UTC by default, so the timezone must be "Etc/UTC"
          timezone => "Etc/UTC"
          target => "@timestamp"
        }
        # mutate filter: remove fields that are not needed
        mutate {
          remove_field => ["@version","message","host","agent","ecs","cs-uri-query"]
          #remove_field => ["log_timestamp"]
        }
        # useragent filter: parse client details from the user agent string
        useragent {
          source => "cs-useragent"
          prefix => "user_agent."
          remove_field => "cs-useragent"
        }
        # geoip filter: look up the geographic location of the client IP
        geoip {
          source => "c-ip"
        }
      }
    }

    #------------------- Output -----------------------
    output {
      # Store the logs of each IIS site in its own index
      if [type] == "filebeat-iis-25" {
        elasticsearch {
          hosts => ["192.168.0.170:9200"]
          user => "elastic"
          password => "123456"
          index => "filebeat-iis-25-%{+YYYYMMdd}"
        }
      }
      if [type] == "filebeat-iis-27" {
        elasticsearch {
          hosts => ["192.168.0.170:9200"]
          user => "elastic"
          password => "123456"
          index => "filebeat-iis-27-%{+YYYYMMdd}"
        }
      }
    }
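
The following Python sketch approximates what the grok, date, and index-name steps above do to a single IIS log line. It is a simplified stand-in under stated assumptions, not how Logstash works internally: the regex uses plain `\S+` instead of grok's stricter patterns, Python group names cannot contain hyphens so they are mapped back to the IIS field names afterwards, and the function names and sample line are invented for this example.

```python
import re
from datetime import datetime, timezone

# Simplified stand-in for the grok pattern: one group per space-separated IIS field.
IIS_LINE = re.compile(
    r"(?P<log_timestamp>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}) "
    r"(?P<s_ip>\S+) (?P<cs_method>\S+) (?P<cs_uri_stem>\S+) (?P<cs_uri_query>\S+) "
    r"(?P<s_port>\S+) (?P<c_username>\S+) (?P<c_ip>\S+) "
    r"(?P<cs_useragent>\S+) (?P<cs_referer>\S+) "
    r"(?P<sc_status>\S+) (?P<sc_substatus>\S+) (?P<sc_win32_status>\S+) (?P<time_taken>\S+)"
)

# Fields written as (%{...:name}|-) in the grok pattern: a bare "-" leaves them unset.
OPTIONAL = {"s-ip", "cs-method", "s-port", "c-username", "c-ip",
            "sc-status", "sc-substatus", "sc-win32-status", "time-taken"}


def parse_iis_line(line, site_type):
    """Return an event dict for one log line, or None if the line does not match."""
    match = IIS_LINE.match(line)
    if match is None:
        return None
    event = {"type": site_type}
    for key, value in match.groupdict().items():
        name = key if key == "log_timestamp" else key.replace("_", "-")
        if name in OPTIONAL and value == "-":
            continue
        event[name] = value
    # Like the date filter: interpret the logged time as UTC and store it as @timestamp
    ts = datetime.strptime(event["log_timestamp"], "%Y-%m-%d %H:%M:%S")
    event["@timestamp"] = ts.replace(tzinfo=timezone.utc)
    return event


def index_name(event):
    """Daily index name, analogous to index => "<type>-%{+YYYYMMdd}"."""
    return f"{event['type']}-{event['@timestamp']:%Y%m%d}"


# Made-up sample line
line = ("2021-03-01 23:59:30 192.168.0.25 GET /index.html - 80 - 10.0.0.8 "
        "Mozilla/5.0+(Windows+NT+10.0) - 200 0 0 15")
event = parse_iis_line(line, "filebeat-iis-25")
print(event["c-ip"], event["sc-status"], index_name(event))
```

Because the timestamp is treated as UTC, a request logged late in the evening UTC still lands in that UTC day's index, regardless of the server's local time zone.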

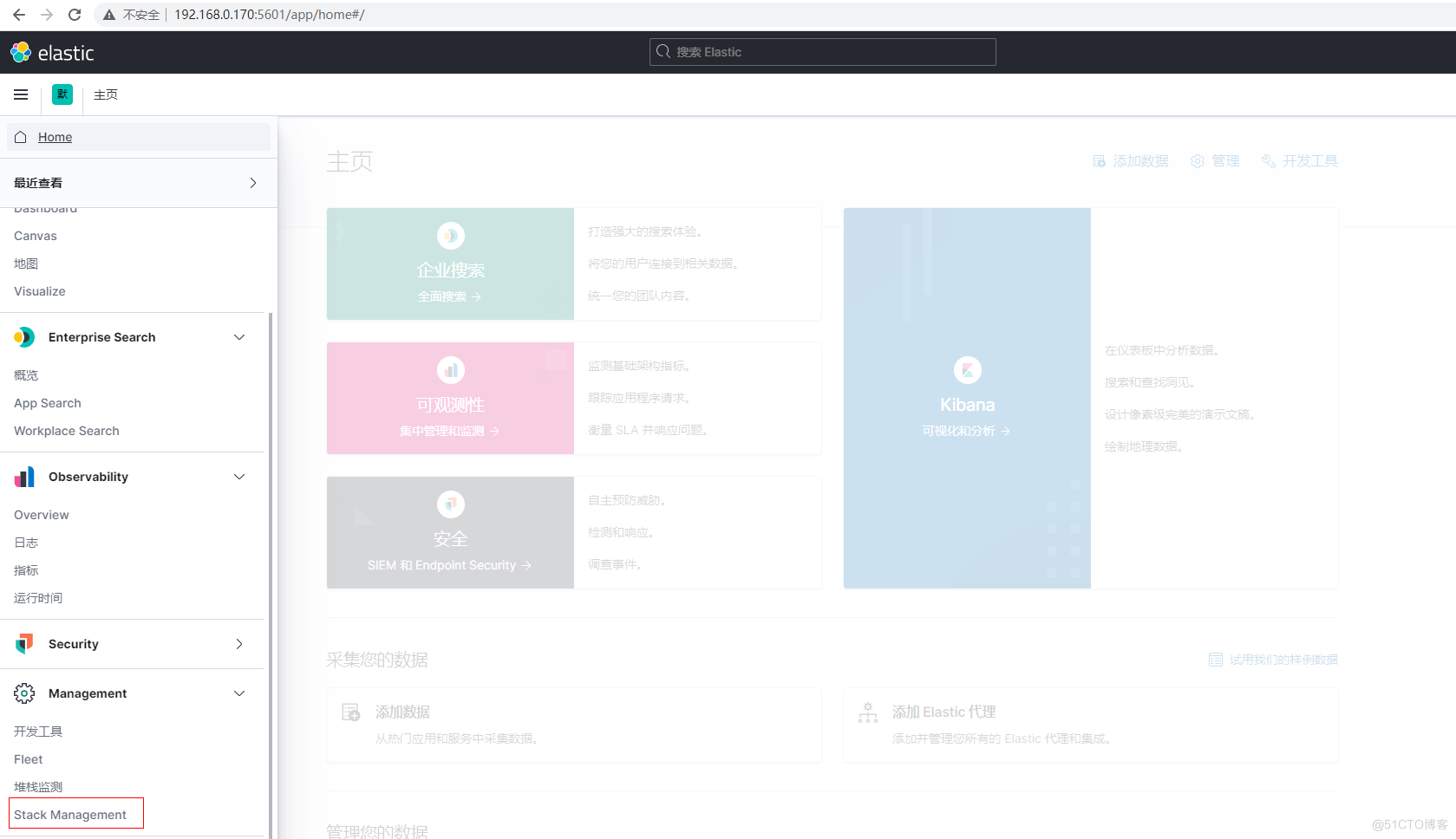

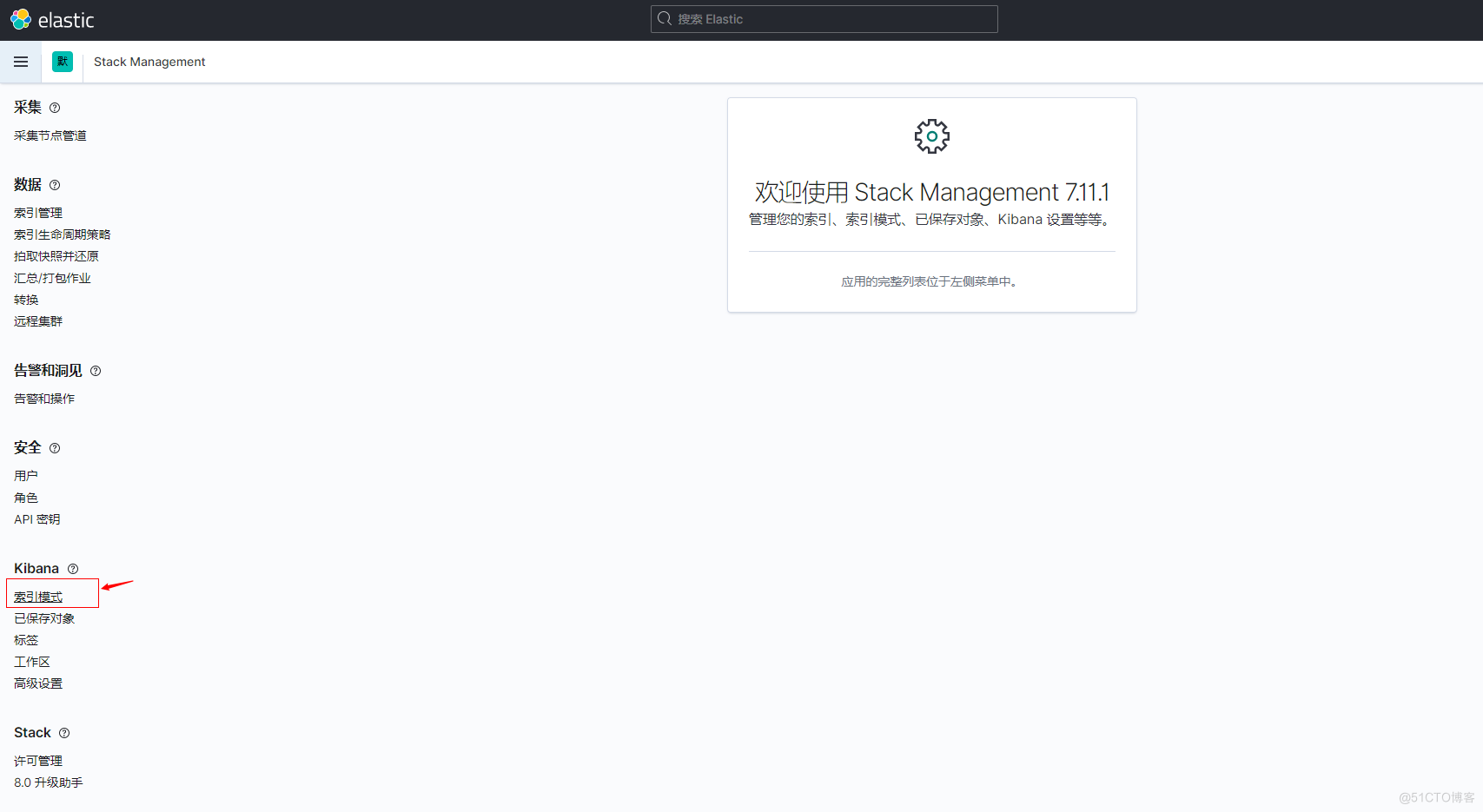

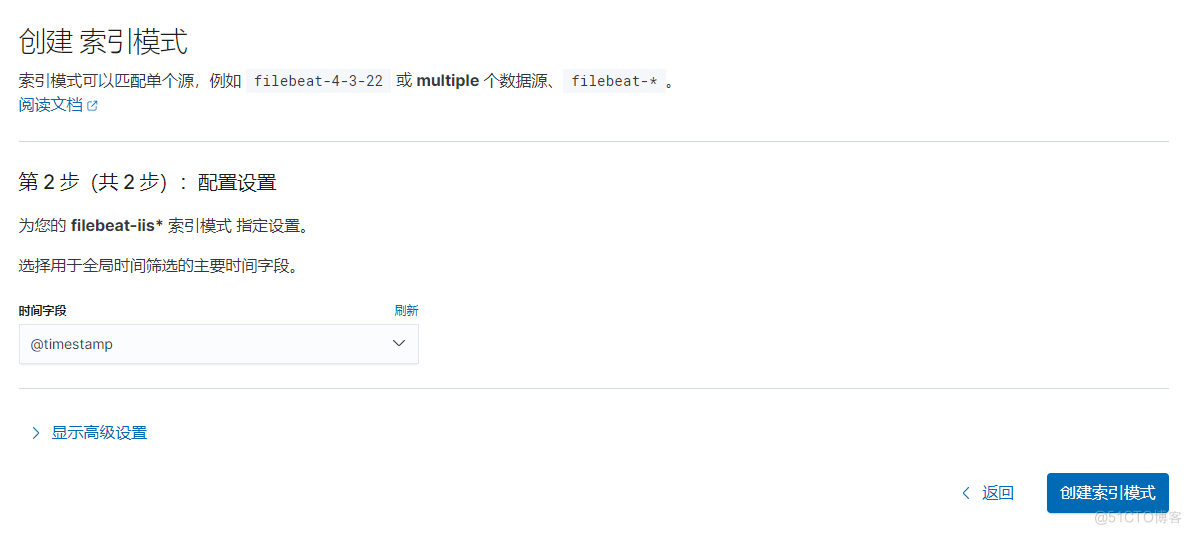

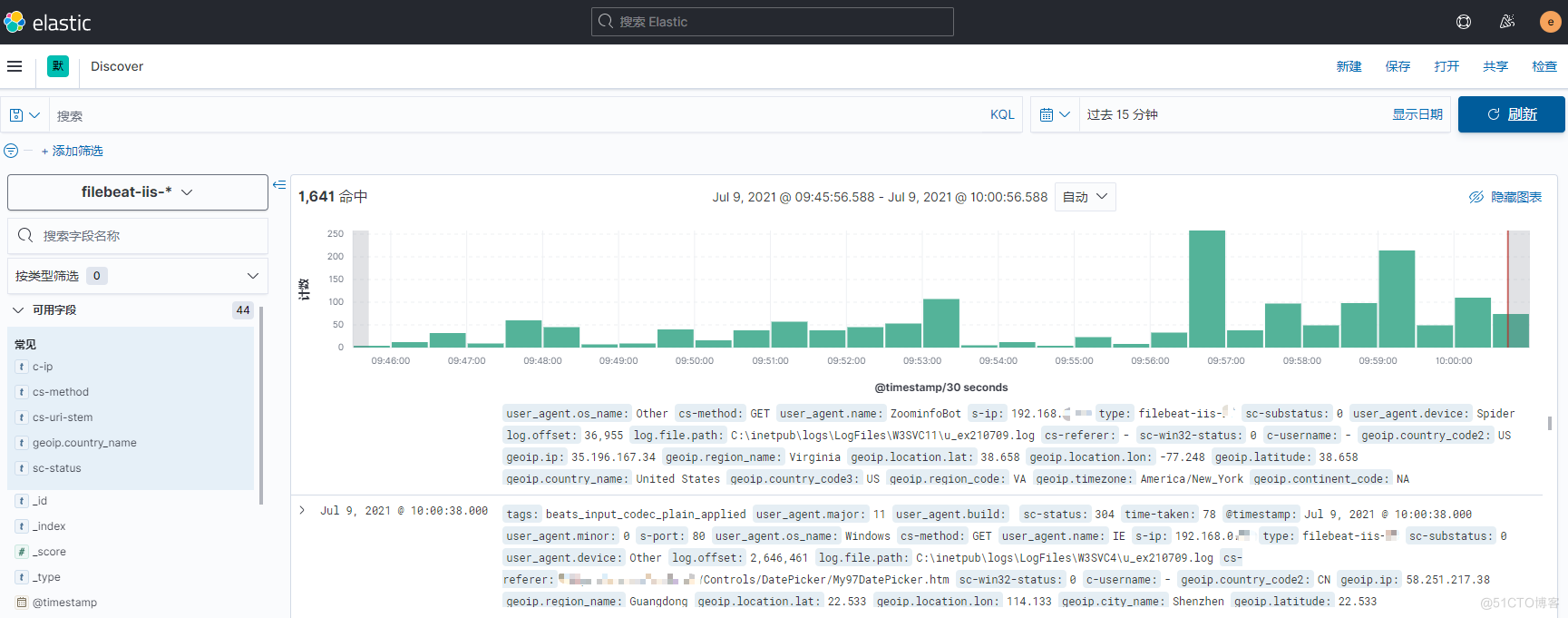

4. Configure Kibana

Log in to Kibana:

http://192.168.0.170:5601/

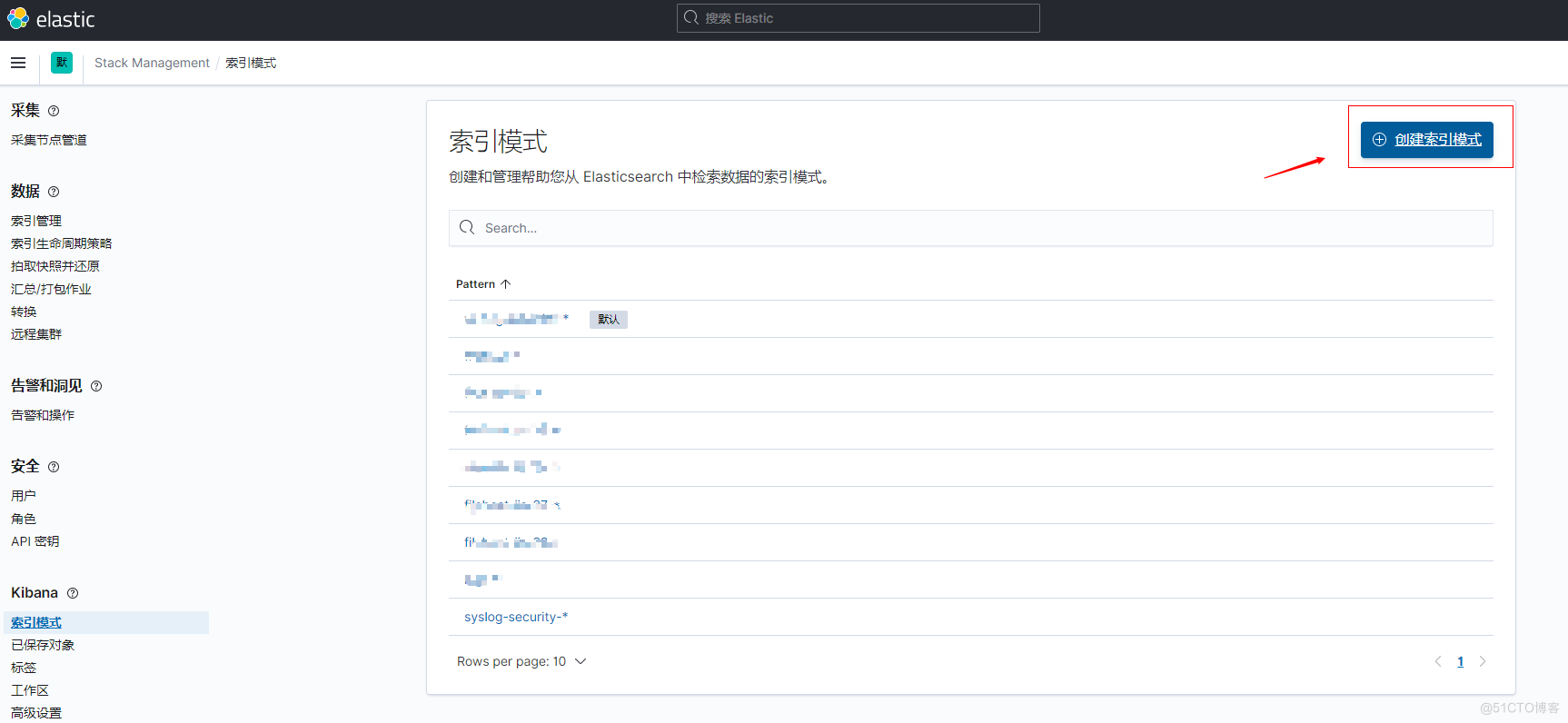

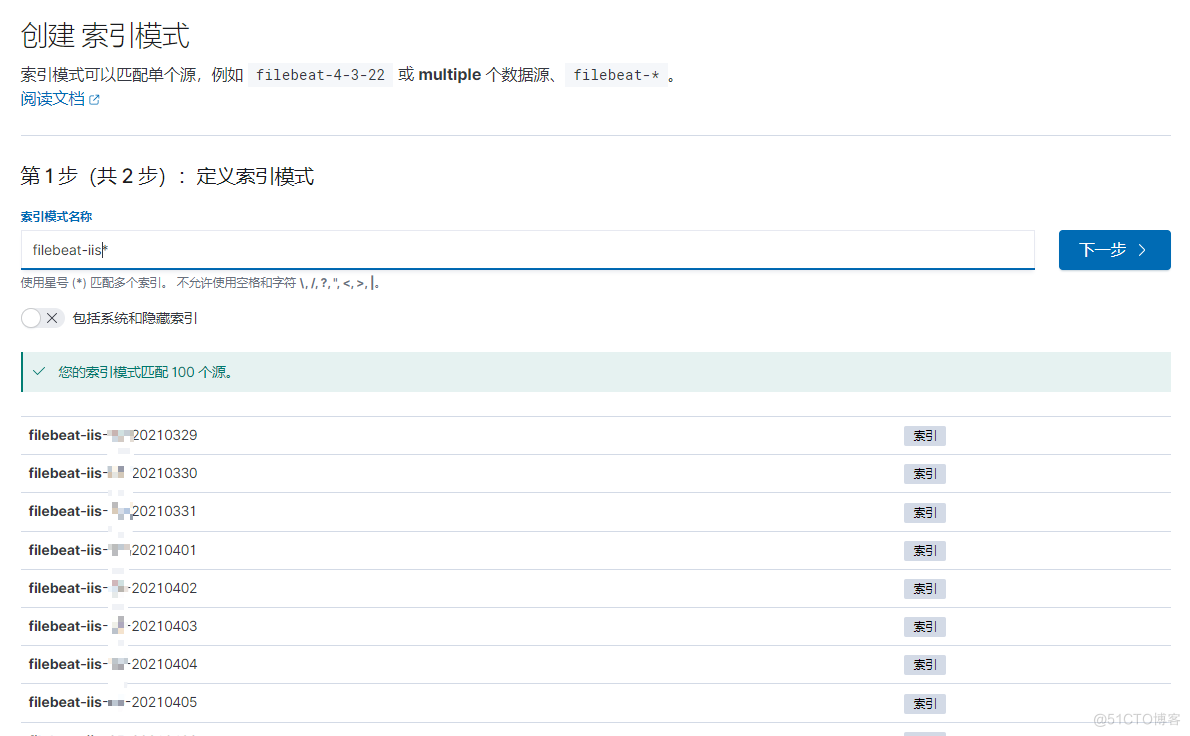

Create an index pattern.

Once it is created, the new index can be seen in Discover.

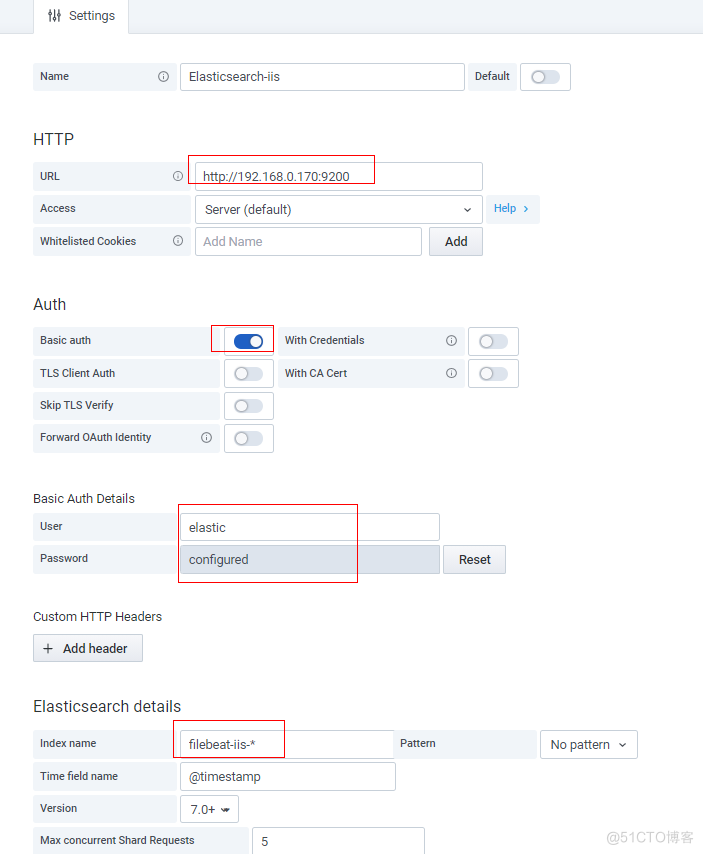

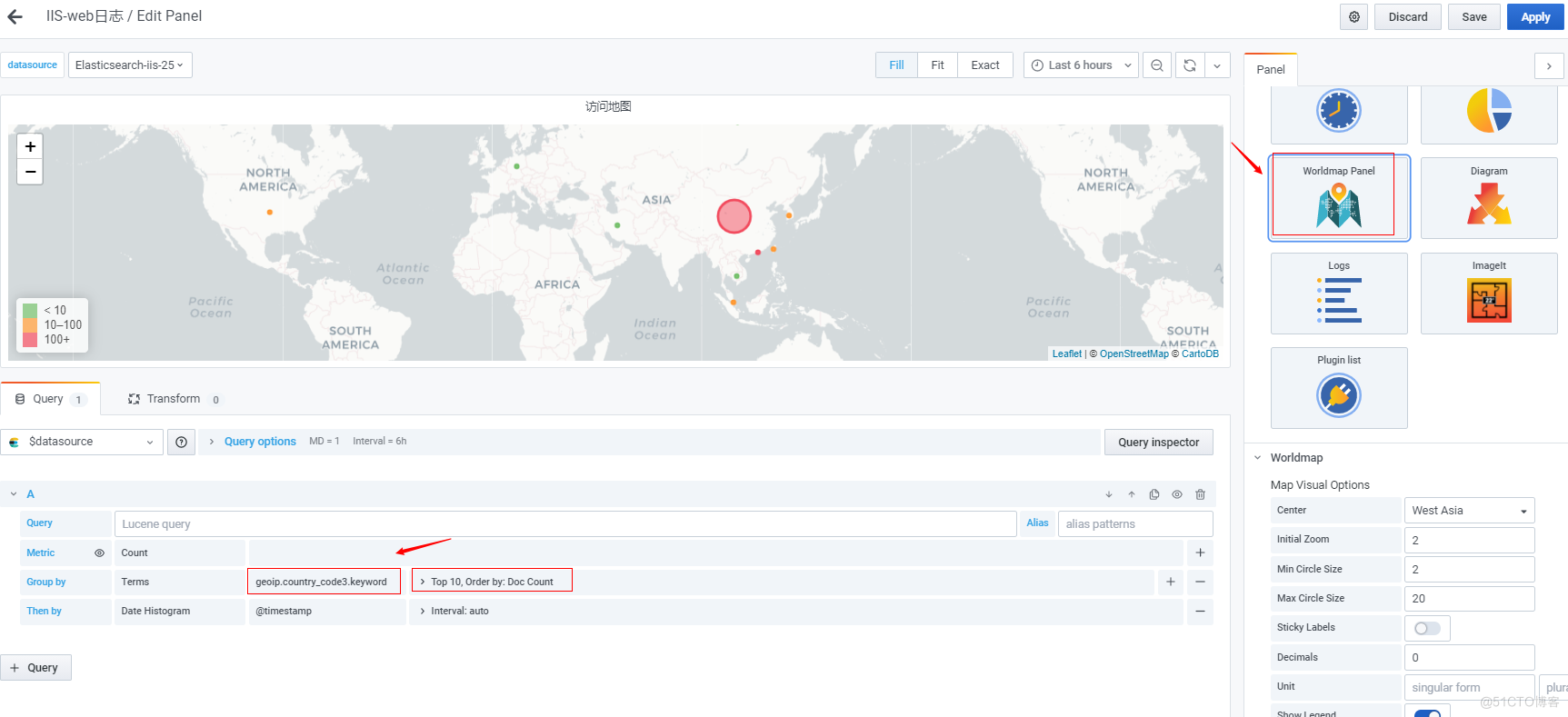

5. Display in Grafana

See the guide "Installing Grafana".

Add a data source.

Build a panel: map display.

Build a panel: top 10 by request count.

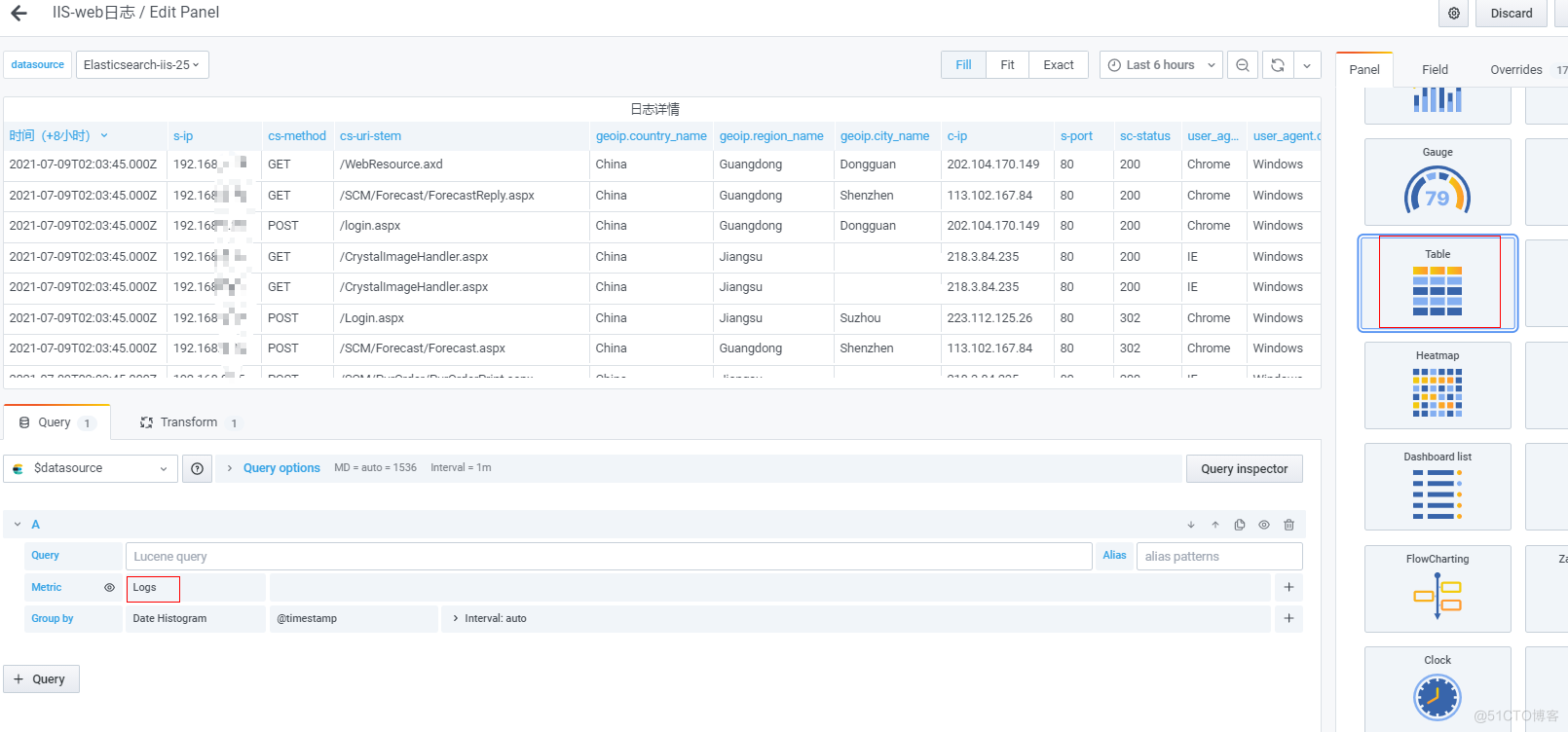
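
A top-10 panel boils down to a terms aggregation in Elasticsearch. The sketch below builds such a request body in Python so the structure is visible. It is an assumption-laden illustration, not the query Grafana actually generates: the helper name `top_n_query`, the aggregation name `top_values`, the 24-hour time range, and the `c-ip.keyword` field (which assumes Elasticsearch's default dynamic mapping for strings) are all chosen for this example.

```python
import json


def top_n_query(field, size=10, since="now-24h"):
    """Build an Elasticsearch search body that counts the most frequent values of a field."""
    return {
        "size": 0,  # return only the aggregation, no individual log documents
        "query": {"range": {"@timestamp": {"gte": since}}},
        "aggs": {"top_values": {"terms": {"field": field, "size": size}}},
    }


# Assumed field name; adjust it to the actual index mapping
body = top_n_query("c-ip.keyword")
print(json.dumps(body, indent=2))
```

The same body could be sent to the `_search` endpoint of a `filebeat-iis-*` index to check the numbers a panel shows.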

Build a panel: log details.

The other panels are built in a similar way. The final result looks like this: