摘要:

本文介绍的Ceph 集群搭建是基于luminous版本(ceph -v: ceph version 12.2.11 (26dc3775efc7bb286a1d6d66faee0ba30ea23eee) luminous (stable)),ceph各个版本会有不同。

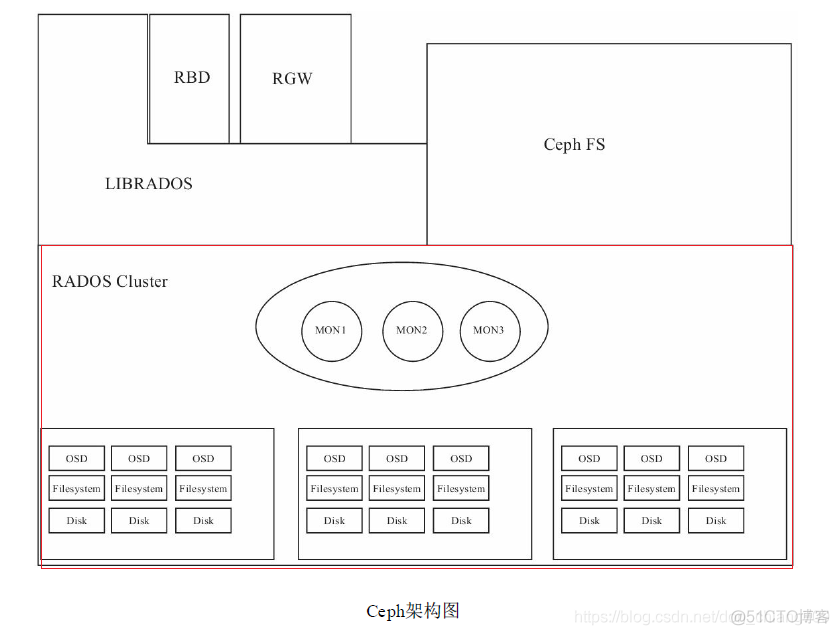

另外我是基于ubuntu物理机(后文提到的admin-node节点)上创建了3个CentOS VM(后文提到的node1/2/3节点)来搭建Ceph集群的。先上个图来看看我们要搭建的Ceph集群是属于Ceph架构图的哪一部分? 它对应下图红色方框里的RADOS Cluster

Contents

- I. Prepare the machines

- II. Install the Ceph nodes

- III. Build the cluster

I. Prepare the machines

This article describes how to build a Ceph storage cluster on one physical Ubuntu machine plus three CentOS 7 virtual machines.

There are four machines in total: one admin node and three Ceph nodes.

| hostname | ip | role | description |
|---|---|---|---|
| admin-node | 10.38.50.131 | ceph-deploy | admin node (physical machine, Ubuntu) |
| node1 | 192.168.122.157 | mon.node1 | Ceph node, monitor (VM, CentOS 7) |
| node2 | 192.168.122.158 | osd.0 | Ceph node, OSD (VM, CentOS 7) |
| node3 | 192.168.122.159 | osd.1 | Ceph node, OSD (VM, CentOS 7) |

Admin node: admin-node

Ceph nodes: node1, node2, node3

All nodes: admin-node, node1, node2, node3

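1. Set the hostname

# vi /etc/hostname

2. Edit the hosts file

# vi /etc/hosts

10.38.50.131 admin-node

192.168.122.157 node1

192.168.122.158 node2

192.168.122.159 node3

3. Verify connectivity (admin node)

Ping each node by its short hostname (hostname -s) to confirm network connectivity, and fix any hostname resolution problems that show up.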

$ ping node1

$ ping node2

$ ping node3
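The hosts entries and the ping check above could also be scripted. The following is a minimal sketch that assumes the four addresses from the table in this article; `hosts_entries` is a hypothetical helper name used only for illustration. It prints the entries for review, and the commands that change the system or touch the network are left as comments.

```shell
#!/bin/sh
# Sketch: print the /etc/hosts lines for the four machines in this article.
# hosts_entries is a hypothetical helper name, not part of Ceph or ceph-deploy.
hosts_entries() {
    cat <<'EOF'
10.38.50.131 admin-node
192.168.122.157 node1
192.168.122.158 node2
192.168.122.159 node3
EOF
}

# Print the entries so they can be reviewed before being appended anywhere.
hosts_entries

# To apply on a node (needs root), one option is:
#   hosts_entries | sudo tee -a /etc/hosts
# To repeat the ping check from the admin node:
#   for n in node1 node2 node3; do ping -c 1 "$n" || echo "cannot reach $n"; done
```

Keeping the list in one place makes it easier to apply the same entries to all four machines.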

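II. Install the Ceph nodes

1. Install NTP (all nodes)

Installing NTP on every Ceph node (Ceph Monitor nodes in particular) is recommended to avoid failures caused by clock drift; see the clock settings in the Ceph documentation for details.

On CentOS:

sudo yum install ntp ntpdate ntp-doc

On Ubuntu: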

sudo apt-get install ntp ntpdate ntp-doc

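2. Install SSH (all nodes)

sudo yum install openssh-server

3. Create a user for deploying Ceph (all nodes)

ceph-deploy must log in to each Ceph node as a regular user that has passwordless sudo privileges, because it installs software and writes configuration files without prompting for a password.

Create a dedicated user for ceph-deploy on every Ceph node in the cluster, but do not name it "ceph".

1) Create the new user on each Ceph node

sudo useradd -d /home/yjiang2 -m yjiang2

sudo passwd yjiang2

2) Make sure the new user has sudo privileges on each Ceph node

echo "yjiang2 ALL = (root) NOPASSWD:ALL" | sudo tee /etc/sudoers.d/yjiang2

sudo chmod 0440 /etc/sudoers.d/yjiang2

4. Enable passwordless SSH login (admin node)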

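Because ceph-deploy does not prompt for passwords, you must generate an SSH key pair on the admin node and distribute its public key to every Ceph node. ceph-deploy will attempt to generate SSH key pairs for the initial monitors.

1) Generate an SSH key pair

Do not use sudo or the root user. When prompted with "Enter passphrase", press Enter to leave the passphrase empty:

// Switch user; unless stated otherwise, all later steps run as this user

# su yjiang2

// Generate the key pair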

$ ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/home/yjiang2/.ssh/id_rsa):

Created directory '/home/yjiang2/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/yjiang2/.ssh/id_rsa.

Your public key has been saved in /home/yjiang2/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:Tb0VpUOZtmh+QBRjUOE0n2Uy3WuoZVgXn6TBBb2SsGk yjiang2@admin-node

The key's randomart image is:

+---[RSA 2048]----+

| .+@=OO*|

| *.BB@=|

| ..O+Xo+|

| o E+O.= |

| S oo=.o |

| .. . |

| . |

| |

| |

+----[SHA256]-----+

2) Copy the public key to each Ceph node

ssh-copy-id yjiang2@node1

ssh-copy-id yjiang2@node2

ssh-copy-id yjiang2@node3

Afterwards, under /home/yjiang2/.ssh/:

- admin-node has the new files id_rsa, id_rsa.pub and known_hosts;

- node1, node2 and node3 have the new file authorized_keys.

3) Edit the ~/.ssh/config file

Edit ~/.ssh/config (create it if it does not exist) so that ceph-deploy logs in to the Ceph nodes with the user you created.

// The author ran this with sudo

$ sudo vi ~/.ssh/config

Host admin-node

Hostname admin-node

User yjiang2

Host node1

Hostname node1

User yjiang2

Host node2

Hostname node2

User yjiang2

Host node3

Hostname node3

User yjiang2

4) Test that SSH works

$ ssh yjiang2@node1

$ exit

$ ssh yjiang2@node2

$ exit

$ ssh yjiang2@node3

$ exit

- Problem: if you see "Bad owner or permissions on /home/yjiang2/.ssh/config", fix the file permissions with the command below.

$ sudo chmod 644 ~/.ssh/config
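The per-host stanzas in ~/.ssh/config from step 3) follow a fixed pattern, so they could be generated rather than typed. This is a minimal sketch, assuming the deploy user `yjiang2` and the host names from this article; `ssh_config_for` is a hypothetical helper name. It only prints to standard output, and writing the file is left as a commented suggestion.

```shell
#!/bin/sh
# Sketch: generate ~/.ssh/config stanzas for a deploy user and a list of hosts.
# ssh_config_for is a hypothetical helper; yjiang2 is the user created earlier.
ssh_config_for() {
    user=$1
    shift
    for host in "$@"; do
        printf 'Host %s\n    Hostname %s\n    User %s\n' "$host" "$host" "$user"
    done
}

# Print the stanzas for the four machines used in this article.
ssh_config_for yjiang2 admin-node node1 node2 node3

# To install the result with permissions that ssh accepts
# (the file must not be writable by group or others):
#   ssh_config_for yjiang2 admin-node node1 node2 node3 > ~/.ssh/config
#   chmod 600 ~/.ssh/config
```

Writing the file as the deploy user with restrictive permissions should also avoid the "Bad owner or permissions" error mentioned above.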

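5. Bring up networking at boot (Ceph nodes: node1, node2, node3)

Ceph OSD daemons connect to each other over the network and report their state to the Monitors. If networking is off by default, the Ceph cluster cannot come online at boot until you enable it. My network interface is eth0.

$ sudo grep ONBOOT -rn /etc/sysconfig/network-scripts/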

/etc/sysconfig/network-scripts/ifcfg-lo:8:ONBOOT=yes

/etc/sysconfig/network-scripts/ifup-ippp:22:if [ "${2}" = "boot" -a "${ONBOOT}" = "no" ]; then

/etc/sysconfig/network-scripts/ifup-plip:9:if [ "foo$2" = "fooboot" -a "${ONBOOT}" = "no" ]; then

/etc/sysconfig/network-scripts/ifup-plusb:20:if [ "foo$2" = "fooboot" -a "${ONBOOT}" = "no" ]

/etc/sysconfig/network-scripts/ifup-ppp:40:if [ "${2}" = "boot" -a "${ONBOOT}" = "no" ]; then

/etc/sysconfig/network-scripts/ifcfg-eth0:15:ONBOOT=yes

/etc/sysconfig/network-scripts/ifcfg-eth0.bak:15:ONBOOT=yes

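// Make sure ONBOOT is set to yes

6. Open the required ports (Ceph nodes: node1, node2, node3)

Ceph Monitors communicate on port 6789 by default, and OSDs use ports in the range 6800:7300 by default. A Ceph OSD can use multiple network connections for traffic with clients and monitors, and for replication and heartbeats with other OSDs.

$ sudo firewall-cmd --zone=public --add-port=6789/tcp --permanent

$ sudo firewall-cmd --zone=public --add-port=6800-7300/tcp --permanent

$ sudo firewall-cmd --reload

// Or turn the firewall off

$ sudo systemctl stop firewalld

$ sudo systemctl disable firewalld

7. Terminal (TTY) (Ceph nodes: node1, node2, node3)

ceph-deploy commands may fail on CentOS and RHEL. If requiretty is set by default on your Ceph nodes, run

$ sudo visudo

find the Defaults requiretty option, and either change it to Defaults:yjiang2 !requiretty (yjiang2 being the deploy user created earlier) or comment it out, so that ceph-deploy can connect as that user.

Always edit /etc/sudoers with sudo visudo rather than a plain text editor.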
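Before running ceph-deploy it can help to confirm whether requiretty is still active on a node. The sketch below is one possible check, not something the original article ran; `has_requiretty` is a hypothetical helper name, and the demonstration uses a throwaway sample file rather than the real /etc/sudoers.

```shell
#!/bin/sh
# Sketch: report whether a sudoers-style file still has an active
# "Defaults requiretty" line. has_requiretty is a hypothetical helper name.
has_requiretty() {
    # Only uncommented lines match; commented-out lines start with '#'.
    grep -Eq '^[[:space:]]*Defaults[[:space:]]+requiretty' "$1"
}

# Demonstrate on a throwaway sample instead of the real /etc/sudoers.
sample=$(mktemp)
printf 'Defaults    requiretty\n#Defaults   visiblepw\n' > "$sample"
if has_requiretty "$sample"; then
    echo "requiretty is active"
else
    echo "requiretty is not active"
fi
rm -f "$sample"

# On a real node, /etc/sudoers is readable only by root, for example:
#   sudo grep -En '^[[:space:]]*Defaults[[:space:]]+requiretty' /etc/sudoers
```

The check is read-only; any change to /etc/sudoers should still go through sudo visudo.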

8. Disable SELinux (Ceph nodes: node1, node2, node3)

$ sudo setenforce 0

setenforce: SELinux is disabled

To make the SELinux setting persistent (if it really is the root cause of a problem), edit its configuration file /etc/selinux/config:

$ sudo sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

This sets SELINUX=disabled.
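To see what the sed expression changes before touching /etc/selinux/config, it can be run without -i on a scratch file. This is a small sketch under that assumption; `preview_selinux_change` is a hypothetical helper name and the sample content is made up for the demonstration.

```shell
#!/bin/sh
# Sketch: preview the SELINUX= substitution on any file without modifying it.
# preview_selinux_change is a hypothetical helper name.
preview_selinux_change() {
    # Same expression as in the article, but without -i, so output goes to stdout.
    sed 's/^SELINUX=.*/SELINUX=disabled/' "$1"
}

# Demonstrate on a scratch file with made-up sample content.
demo=$(mktemp)
printf '# sample config\nSELINUX=enforcing\nSELINUXTYPE=targeted\n' > "$demo"
preview_selinux_change "$demo"
rm -f "$demo"

# On a real node the preview could be:
#   preview_selinux_change /etc/selinux/config
```

Only the line that starts with SELINUX= is rewritten; the SELINUXTYPE= line is left alone because the pattern requires the equals sign directly after SELINUX.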

9. Configure the EPEL repository (admin node: admin-node)

$ sudo apt-get install -y yum-utils && sudo yum-config-manager --add-repo https://dl.fedoraproject.org/pub/epel/7/x86_64/ && sudo yum install --nogpgcheck -y epel-release && sudo rpm --import /etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-7 && sudo rm /etc/yum.repos.d/dl.fedoraproject.org*

10. Add the Ceph package repository (admin node: admin-node)

$ sudo vi /etc/yum.repos.d/ceph.repo

Paste in the following content and save it as /etc/yum.repos.d/ceph.repo.

[ceph]

name=ceph

baseurl=http://mirrors.163.com/ceph/rpm-luminous/el7/x86_64/

gpgcheck=0

[ceph-noarch]

name=cephnoarch

baseurl=http://mirrors.163.com/ceph/rpm-luminous/el7/noarch/

gpgcheck=0

11. Update the package index and install ceph-deploy (admin node: admin-node)

$ sudo apt-get update && sudo apt-get install ceph-deploy

$ sudo apt-get install yum-plugin-priorities

This may take a while; be patient.

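III. Build the cluster

Run the following steps on the admin node:

1. Preparation: create a working directory

Create a directory on the admin node to hold the configuration files and key pairs that ceph-deploy generates.

$ cd ~

$ mkdir ceph-cluster

$ cd ceph-cluster

Note: if you run into trouble after installing Ceph, the following commands remove the packages and configuration:

// Remove the installed packages

$ ceph-deploy purge admin-node node1 node2 node3

// Remove the configuration

$ ceph-deploy purgedata admin-node node1 node2 node3

$ ceph-deploy forgetkeys

2. Create the cluster's monitor node

Create the cluster and initialize the monitor node. The command format is: $ ceph-deploy new {initial-monitor-node(s)}

node1 is the monitor node here, so run:

$ ceph-deploy new node1

Afterwards, ceph-cluster contains three new files: ceph.conf, ceph-deploy-ceph.log and ceph.mon.keyring.

- Problem: if you see "[ceph_deploy][ERROR ] RuntimeError: remote connection got closed, ensure requiretty is disabled for node1", run sudo visudo and comment out Defaults requiretty.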

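3. Edit the configuration file

$ cat ceph.conf

Its content is:

[global]

fsid = 027d5b3c-e011-4a92-9449-c8755cd8f500

mon_initial_members = node1

mon_host = 192.168.122.157

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

Run the command below to change the default replica count in the Ceph configuration file from 3 to 2, so that the cluster can reach the active + clean state with only two OSDs. It appends osd pool default size = 2 to the [global] section:

sed -i '$a\osd pool default size = 2' ceph.conf

If the hosts have more than one network interface, you can also add public network / cluster network to the [global] section of the Ceph configuration file:

public network = {ip-address}/{netmask}

cluster network = {ip-address}/{netmask}

Note: the two network settings above are an addition. By default only public network is set; production deployments usually define both, keeping the cluster network separate from the data network.

4. Install Ceph (finally time to install Ceph; go grab some water)

Install Ceph on all nodes: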

$ ceph-deploy install admin-node node1 node2 node3

- Problem: [ceph_deploy][ERROR ] RuntimeError: Failed to execute command: yum -y install epel-release

Fix (on the node where the command failed):

sudo yum -y remove epel-release

Log of a completed installation (phew, it finally reports success):

~$ ceph-deploy install admin-node node1 node2 node3

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/yjiang2/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (1.5.38): /usr/bin/ceph-deploy install admin-node node1 node2 node3

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] testing : None

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f149da5ec20>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] dev_commit : None

[ceph_deploy.cli][INFO ] install_mds : False

[ceph_deploy.cli][INFO ] stable : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] adjust_repos : False

[ceph_deploy.cli][INFO ] func : <function install at 0x7f149e38f5f0>

[ceph_deploy.cli][INFO ] install_mgr : False

[ceph_deploy.cli][INFO ] install_all : False

[ceph_deploy.cli][INFO ] repo : False

[ceph_deploy.cli][INFO ] host : ['admin-node', 'node1', 'node2', 'node3']

[ceph_deploy.cli][INFO ] install_rgw : False

[ceph_deploy.cli][INFO ] install_tests : False

[ceph_deploy.cli][INFO ] repo_url : None

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] install_osd : False

[ceph_deploy.cli][INFO ] version_kind : stable

[ceph_deploy.cli][INFO ] install_common : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] dev : master

[ceph_deploy.cli][INFO ] nogpgcheck : False

[ceph_deploy.cli][INFO ] local_mirror : None

[ceph_deploy.cli][INFO ] release : None

[ceph_deploy.cli][INFO ] install_mon : False

[ceph_deploy.cli][INFO ] gpg_url : None

[ceph_deploy.install][DEBUG ] Installing stable version jewel on cluster ceph hosts admin-node node1 node2 node3

[ceph_deploy.install][DEBUG ] Detecting platform for host admin-node ...

[admin-node][DEBUG ] connection detected need for sudo

[sudo] password for yjiang2:

[admin-node][DEBUG ] connected to host: admin-node

[admin-node][DEBUG ] detect platform information from remote host

[admin-node][DEBUG ] detect machine type

[ceph_deploy.install][INFO ] Distro info: Ubuntu 18.04 bionic

[admin-node][INFO ] installing Ceph on admin-node

[admin-node][INFO ] Running command: sudo env DEBIAN_FRONTEND=noninteractive DEBIAN_PRIORITY=critical apt-get --assume-yes -q --no-install-recommends install ca-certificates apt-transport-https

[admin-node][DEBUG ] Reading package lists...

[admin-node][DEBUG ] Building dependency tree...

[admin-node][DEBUG ] Reading state information...

[admin-node][DEBUG ] ca-certificates is already the newest version (20180409).

[admin-node][DEBUG ] apt-transport-https is already the newest version (1.6.11).

[admin-node][DEBUG ] The following packages were automatically installed and are no longer required:

[admin-node][DEBUG ] linux-headers-4.15.0-50 linux-headers-4.15.0-50-generic

[admin-node][DEBUG ] linux-headers-4.18.0-15 linux-headers-4.18.0-15-generic

[admin-node][DEBUG ] linux-image-4.15.0-50-generic linux-image-4.18.0-15-generic

[admin-node][DEBUG ] linux-modules-4.15.0-50-generic linux-modules-4.18.0-15-generic

[admin-node][DEBUG ] linux-modules-extra-4.15.0-50-generic linux-modules-extra-4.18.0-15-generic

[admin-node][DEBUG ] Use 'sudo apt autoremove' to remove them.

[admin-node][DEBUG ] 0 upgraded, 0 newly installed, 0 to remove and 69 not upgraded.

[admin-node][INFO ] Running command: sudo env DEBIAN_FRONTEND=noninteractive DEBIAN_PRIORITY=critical apt-get --assume-yes -q update

[admin-node][DEBUG ] Get:1 http://security.ubuntu.com/ubuntu bionic-security InRelease [88.7 kB]

[admin-node][DEBUG ] Hit:2 http://cn.archive.ubuntu.com/ubuntu bionic InRelease

[admin-node][DEBUG ] Hit:3 http://download.ceph.com/debian-luminous bionic InRelease

[admin-node][DEBUG ] Get:4 http://cn.archive.ubuntu.com/ubuntu bionic-updates InRelease [88.7 kB]

[admin-node][DEBUG ] Hit:5 http://cn.archive.ubuntu.com/ubuntu bionic-backports InRelease

[admin-node][DEBUG ] Fetched 177 kB in 2s (78.8 kB/s)

[admin-node][DEBUG ] Reading package lists...

[admin-node][INFO ] Running command: sudo env DEBIAN_FRONTEND=noninteractive DEBIAN_PRIORITY=critical apt-get --assume-yes -q --no-install-recommends install ceph ceph-osd ceph-mds ceph-mon radosgw

[admin-node][DEBUG ] Reading package lists...

[admin-node][DEBUG ] Building dependency tree...

[admin-node][DEBUG ] Reading state information...

[admin-node][DEBUG ] ceph is already the newest version (12.2.11-0ubuntu0.18.04.2).

[admin-node][DEBUG ] ceph-mon is already the newest version (12.2.11-0ubuntu0.18.04.2).

[admin-node][DEBUG ] ceph-osd is already the newest version (12.2.11-0ubuntu0.18.04.2).

[admin-node][DEBUG ] radosgw is already the newest version (12.2.11-0ubuntu0.18.04.2).

[admin-node][DEBUG ] ceph-mds is already the newest version (12.2.11-0ubuntu0.18.04.2).

[admin-node][DEBUG ] The following packages were automatically installed and are no longer required:

[admin-node][DEBUG ] linux-headers-4.15.0-50 linux-headers-4.15.0-50-generic

[admin-node][DEBUG ] linux-headers-4.18.0-15 linux-headers-4.18.0-15-generic

[admin-node][DEBUG ] linux-image-4.15.0-50-generic linux-image-4.18.0-15-generic

[admin-node][DEBUG ] linux-modules-4.15.0-50-generic linux-modules-4.18.0-15-generic

[admin-node][DEBUG ] linux-modules-extra-4.15.0-50-generic linux-modules-extra-4.18.0-15-generic

[admin-node][DEBUG ] Use 'sudo apt autoremove' to remove them.

[admin-node][DEBUG ] 0 upgraded, 0 newly installed, 0 to remove and 69 not upgraded.

[admin-node][INFO ] Running command: sudo ceph --version

[admin-node][DEBUG ] ceph version 12.2.11 (26dc3775efc7bb286a1d6d66faee0ba30ea23eee) luminous (stable)

[ceph_deploy.install][DEBUG ] Detecting platform for host node1 ...

[node1][DEBUG ] connection detected need for sudo

[node1][DEBUG ] connected to host: node1

[node1][DEBUG ] detect platform information from remote host

[node1][DEBUG ] detect machine type

[ceph_deploy.install][INFO ] Distro info: CentOS Linux 7.6.1810 Core

[node1][INFO ] installing Ceph on node1

[node1][INFO ] Running command: sudo yum clean all

[node1][DEBUG ] Loaded plugins: fastestmirror, langpacks

[node1][DEBUG ] Cleaning repos: base centos-ceph-luminous extras updates

[node1][DEBUG ] Cleaning up list of fastest mirrors

[node1][INFO ] Running command: sudo yum -y install ceph ceph-radosgw

[node1][DEBUG ] Loaded plugins: fastestmirror, langpacks

[node1][DEBUG ] Determining fastest mirrors

[node1][DEBUG ] * base: mirrors.aliyun.com

[node1][DEBUG ] * extras: mirrors.aliyun.com

[node1][DEBUG ] * updates: mirrors.aliyun.com

[node1][DEBUG ] Resolving Dependencies

[node1][DEBUG ] --> Running transaction check

[node1][DEBUG ] ---> Package ceph.x86_64 2:12.2.11-0.el7 will be installed

[node1][DEBUG ] --> Processing Dependency: ceph-osd = 2:12.2.11-0.el7 for package: 2:ceph-12.2.11-0.el7.x86_64

[node1][DEBUG ] --> Processing Dependency: ceph-mon = 2:12.2.11-0.el7 for package: 2:ceph-12.2.11-0.el7.x86_64

[node1][DEBUG ] --> Processing Dependency: ceph-mgr = 2:12.2.11-0.el7 for package: 2:ceph-12.2.11-0.el7.x86_64

[node1][DEBUG ] --> Processing Dependency: ceph-mds = 2:12.2.11-0.el7 for package: 2:ceph-12.2.11-0.el7.x86_64

[node1][DEBUG ] ---> Package ceph-radosgw.x86_64 2:12.2.11-0.el7 will be installed

[node1][DEBUG ] --> Processing Dependency: ceph-selinux = 2:12.2.11-0.el7 for package: 2:ceph-radosgw-12.2.11-0.el7.x86_64

[node1][DEBUG ] --> Processing Dependency: mailcap for package: 2:ceph-radosgw-12.2.11-0.el7.x86_64

[node1][DEBUG ] --> Running transaction check

[node1][DEBUG ] ---> Package ceph-mds.x86_64 2:12.2.11-0.el7 will be installed

[node1][DEBUG ] --> Processing Dependency: ceph-base = 2:12.2.11-0.el7 for package: 2:ceph-mds-12.2.11-0.el7.x86_64

[node1][DEBUG ] ---> Package ceph-mgr.x86_64 2:12.2.11-0.el7 will be installed

[node1][DEBUG ] --> Processing Dependency: python-werkzeug for package: 2:ceph-mgr-12.2.11-0.el7.x86_64

[node1][DEBUG ] --> Processing Dependency: python-pecan for package: 2:ceph-mgr-12.2.11-0.el7.x86_64

[node1][DEBUG ] --> Processing Dependency: python-jinja2 for package: 2:ceph-mgr-12.2.11-0.el7.x86_64

[node1][DEBUG ] --> Processing Dependency: python-cherrypy for package: 2:ceph-mgr-12.2.11-0.el7.x86_64

[node1][DEBUG ] --> Processing Dependency: pyOpenSSL for package: 2:ceph-mgr-12.2.11-0.el7.x86_64

[node1][DEBUG ] ---> Package ceph-mon.x86_64 2:12.2.11-0.el7 will be installed

[node1][DEBUG ] --> Processing Dependency: python-flask for package: 2:ceph-mon-12.2.11-0.el7.x86_64

[node1][DEBUG ] ---> Package ceph-osd.x86_64 2:12.2.11-0.el7 will be installed

[node1][DEBUG ] ---> Package ceph-selinux.x86_64 2:12.2.11-0.el7 will be installed

[node1][DEBUG ] ---> Package mailcap.noarch 0:2.1.41-2.el7 will be installed

[node1][DEBUG ] --> Running transaction check

[node1][DEBUG ] ---> Package ceph-base.x86_64 2:12.2.11-0.el7 will be installed

[node1][DEBUG ] ---> Package pyOpenSSL.x86_64 0:0.13.1-4.el7 will be installed

[node1][DEBUG ] ---> Package python-cherrypy.noarch 0:3.2.2-4.el7 will be installed

[node1][DEBUG ] ---> Package python-flask.noarch 1:0.10.1-4.el7 will be installed

[node1][DEBUG ] --> Processing Dependency: python-itsdangerous for package: 1:python-flask-0.10.1-4.el7.noarch

[node1][DEBUG ] ---> Package python-jinja2.noarch 0:2.7.2-3.el7_6 will be installed

[node1][DEBUG ] --> Processing Dependency: python-babel >= 0.8 for package: python-jinja2-2.7.2-3.el7_6.noarch

[node1][DEBUG ] --> Processing Dependency: python-markupsafe for package: python-jinja2-2.7.2-3.el7_6.noarch

[node1][DEBUG ] ---> Package python-werkzeug.noarch 0:0.9.1-2.el7 will be installed

[node1][DEBUG ] ---> Package python2-pecan.noarch 0:1.1.2-1.el7 will be installed

[node1][DEBUG ] --> Processing Dependency: python-webtest for package: python2-pecan-1.1.2-1.el7.noarch

[node1][DEBUG ] --> Processing Dependency: python-webob for package: python2-pecan-1.1.2-1.el7.noarch

[node1][DEBUG ] --> Processing Dependency: python-singledispatch for package: python2-pecan-1.1.2-1.el7.noarch

[node1][DEBUG ] --> Processing Dependency: python-simplegeneric for package: python2-pecan-1.1.2-1.el7.noarch

[node1][DEBUG ] --> Processing Dependency: python-mako for package: python2-pecan-1.1.2-1.el7.noarch

[node1][DEBUG ] --> Processing Dependency: python-logutils for package: python2-pecan-1.1.2-1.el7.noarch

[node1][DEBUG ] --> Running transaction check

[node1][DEBUG ] ---> Package python-babel.noarch 0:0.9.6-8.el7 will be installed

[node1][DEBUG ] ---> Package python-itsdangerous.noarch 0:0.23-2.el7 will be installed

[node1][DEBUG ] ---> Package python-logutils.noarch 0:0.3.3-3.el7 will be installed

[node1][DEBUG ] ---> Package python-mako.noarch 0:0.8.1-2.el7 will be installed

[node1][DEBUG ] --> Processing Dependency: python-beaker for package: python-mako-0.8.1-2.el7.noarch

[node1][DEBUG ] ---> Package python-markupsafe.x86_64 0:0.11-10.el7 will be installed

[node1][DEBUG ] ---> Package python-simplegeneric.noarch 0:0.8-7.el7 will be installed

[node1][DEBUG ] ---> Package python-webob.noarch 0:1.2.3-7.el7 will be installed

[node1][DEBUG ] ---> Package python-webtest.noarch 0:1.3.4-6.el7 will be installed

[node1][DEBUG ] ---> Package python2-singledispatch.noarch 0:3.4.0.3-4.el7 will be installed

[node1][DEBUG ] --> Running transaction check

[node1][DEBUG ] ---> Package python-beaker.noarch 0:1.5.4-10.el7 will be installed

[node1][DEBUG ] --> Processing Dependency: python-paste for package: python-beaker-1.5.4-10.el7.noarch

[node1][DEBUG ] --> Running transaction check

[node1][DEBUG ] ---> Package python-paste.noarch 0:1.7.5.1-9.20111221hg1498.el7 will be installed

[node1][DEBUG ] --> Processing Dependency: python-tempita for package: python-paste-1.7.5.1-9.20111221hg1498.el7.noarch

[node1][DEBUG ] --> Running transaction check

[node1][DEBUG ] ---> Package python-tempita.noarch 0:0.5.1-6.el7 will be installed

[node1][DEBUG ] --> Finished Dependency Resolution

[node1][DEBUG ]

[node1][DEBUG ] Dependencies Resolved

[node1][DEBUG ]

[node1][DEBUG ] ================================================================================

[node1][DEBUG ] Package Arch Version Repository Size

[node1][DEBUG ] ================================================================================

[node1][DEBUG ] Installing:

[node1][DEBUG ] ceph x86_64 2:12.2.11-0.el7 centos-ceph-luminous 2.5 k

[node1][DEBUG ] ceph-radosgw x86_64 2:12.2.11-0.el7 centos-ceph-luminous 4.0 M

[node1][DEBUG ] Installing for dependencies:

[node1][DEBUG ] ceph-base x86_64 2:12.2.11-0.el7 centos-ceph-luminous 4.0 M

[node1][DEBUG ] ceph-mds x86_64 2:12.2.11-0.el7 centos-ceph-luminous 3.7 M

[node1][DEBUG ] ceph-mgr x86_64 2:12.2.11-0.el7 centos-ceph-luminous 3.6 M

[node1][DEBUG ] ceph-mon x86_64 2:12.2.11-0.el7 centos-ceph-luminous 5.1 M

[node1][DEBUG ] ceph-osd x86_64 2:12.2.11-0.el7 centos-ceph-luminous 13 M

[node1][DEBUG ] ceph-selinux x86_64 2:12.2.11-0.el7 centos-ceph-luminous 21 k

[node1][DEBUG ] mailcap noarch 2.1.41-2.el7 base 31 k

[node1][DEBUG ] pyOpenSSL x86_64 0.13.1-4.el7 base 135 k

[node1][DEBUG ] python-babel noarch 0.9.6-8.el7 base 1.4 M

[node1][DEBUG ] python-beaker noarch 1.5.4-10.el7 base 80 k

[node1][DEBUG ] python-cherrypy noarch 3.2.2-4.el7 base 422 k

[node1][DEBUG ] python-flask noarch 1:0.10.1-4.el7 extras 204 k

[node1][DEBUG ] python-itsdangerous noarch 0.23-2.el7 extras 24 k

[node1][DEBUG ] python-jinja2 noarch 2.7.2-3.el7_6 updates 518 k

[node1][DEBUG ] python-logutils noarch 0.3.3-3.el7 centos-ceph-luminous 42 k

[node1][DEBUG ] python-mako noarch 0.8.1-2.el7 base 307 k

[node1][DEBUG ] python-markupsafe x86_64 0.11-10.el7 base 25 k

[node1][DEBUG ] python-paste noarch 1.7.5.1-9.20111221hg1498.el7

[node1][DEBUG ] base 866 k

[node1][DEBUG ] python-simplegeneric noarch 0.8-7.el7 centos-ceph-luminous 12 k

[node1][DEBUG ] python-tempita noarch 0.5.1-6.el7 base 33 k

[node1][DEBUG ] python-webob noarch 1.2.3-7.el7 base 202 k

[node1][DEBUG ] python-webtest noarch 1.3.4-6.el7 base 102 k

[node1][DEBUG ] python-werkzeug noarch 0.9.1-2.el7 extras 562 k

[node1][DEBUG ] python2-pecan noarch 1.1.2-1.el7 centos-ceph-luminous 268 k

[node1][DEBUG ] python2-singledispatch noarch 3.4.0.3-4.el7 centos-ceph-luminous 18 k

[node1][DEBUG ]

[node1][DEBUG ] Transaction Summary

[node1][DEBUG ] ================================================================================

[node1][DEBUG ] Install 2 Packages (+25 Dependent packages)

[node1][DEBUG ]

[node1][DEBUG ] Total download size: 38 M

[node1][DEBUG ] Installed size: 137 M

[node1][DEBUG ] Downloading packages:

[node1][DEBUG ] --------------------------------------------------------------------------------

[node1][DEBUG ] Total 179 kB/s | 38 MB 03:39

[node1][DEBUG ] Running transaction check

[node1][DEBUG ] Running transaction test

[node1][DEBUG ] Transaction test succeeded

[node1][DEBUG ] Running transaction

[node1][DEBUG ] Installing : 2:ceph-base-12.2.11-0.el7.x86_64 1/27

[node1][DEBUG ] Installing : 2:ceph-selinux-12.2.11-0.el7.x86_64 2/27

[node1][DEBUG ] Installing : pyOpenSSL-0.13.1-4.el7.x86_64 3/27

[node1][DEBUG ] Installing : python-webob-1.2.3-7.el7.noarch 4/27

[node1][DEBUG ] Installing : python-markupsafe-0.11-10.el7.x86_64 5/27

[node1][DEBUG ] Installing : python-werkzeug-0.9.1-2.el7.noarch 6/27

[node1][DEBUG ] Installing : python-webtest-1.3.4-6.el7.noarch 7/27

[node1][DEBUG ] Installing : 2:ceph-mds-12.2.11-0.el7.x86_64 8/27

[node1][DEBUG ] Installing : 2:ceph-osd-12.2.11-0.el7.x86_64 9/27

[node1][DEBUG ] Installing : python-tempita-0.5.1-6.el7.noarch 10/27

[node1][DEBUG ] Installing : python-paste-1.7.5.1-9.20111221hg1498.el7.noarch 11/27

[node1][DEBUG ] Installing : python-beaker-1.5.4-10.el7.noarch 12/27

[node1][DEBUG ] Installing : python-mako-0.8.1-2.el7.noarch 13/27

[node1][DEBUG ] Installing : python-cherrypy-3.2.2-4.el7.noarch 14/27

[node1][DEBUG ] Installing : python-babel-0.9.6-8.el7.noarch 15/27

[node1][DEBUG ] Installing : python-jinja2-2.7.2-3.el7_6.noarch 16/27

[node1][DEBUG ] Installing : python-itsdangerous-0.23-2.el7.noarch 17/27

[node1][DEBUG ] Installing : 1:python-flask-0.10.1-4.el7.noarch 18/27

[node1][DEBUG ] Installing : 2:ceph-mon-12.2.11-0.el7.x86_64 19/27

[node1][DEBUG ] Installing : python-logutils-0.3.3-3.el7.noarch 20/27

[node1][DEBUG ] Installing : mailcap-2.1.41-2.el7.noarch 21/27

[node1][DEBUG ] Installing : python2-singledispatch-3.4.0.3-4.el7.noarch 22/27

[node1][DEBUG ] Installing : python-simplegeneric-0.8-7.el7.noarch 23/27

[node1][DEBUG ] Installing : python2-pecan-1.1.2-1.el7.noarch 24/27

[node1][DEBUG ] Installing : 2:ceph-mgr-12.2.11-0.el7.x86_64 25/27

[node1][DEBUG ] Installing : 2:ceph-12.2.11-0.el7.x86_64 26/27

[node1][DEBUG ] Installing : 2:ceph-radosgw-12.2.11-0.el7.x86_64 27/27

[node1][DEBUG ] Verifying : 1:python-flask-0.10.1-4.el7.noarch 1/27

[node1][DEBUG ] Verifying : python-simplegeneric-0.8-7.el7.noarch 2/27

[node1][DEBUG ] Verifying : python2-singledispatch-3.4.0.3-4.el7.noarch 3/27

[node1][DEBUG ] Verifying : mailcap-2.1.41-2.el7.noarch 4/27

[node1][DEBUG ] Verifying : 2:ceph-mon-12.2.11-0.el7.x86_64 5/27

[node1][DEBUG ] Verifying : python-logutils-0.3.3-3.el7.noarch 6/27

[node1][DEBUG ] Verifying : python-mako-0.8.1-2.el7.noarch 7/27

[node1][DEBUG ] Verifying : 2:ceph-12.2.11-0.el7.x86_64 8/27

[node1][DEBUG ] Verifying : python-beaker-1.5.4-10.el7.noarch 9/27

[node1][DEBUG ] Verifying : python-itsdangerous-0.23-2.el7.noarch 10/27

[node1][DEBUG ] Verifying : python-jinja2-2.7.2-3.el7_6.noarch 11/27

[node1][DEBUG ] Verifying : 2:ceph-mds-12.2.11-0.el7.x86_64 12/27

[node1][DEBUG ] Verifying : python-werkzeug-0.9.1-2.el7.noarch 13/27

[node1][DEBUG ] Verifying : python-markupsafe-0.11-10.el7.x86_64 14/27

[node1][DEBUG ] Verifying : python2-pecan-1.1.2-1.el7.noarch 15/27

[node1][DEBUG ] Verifying : python-babel-0.9.6-8.el7.noarch 16/27

[node1][DEBUG ] Verifying : python-paste-1.7.5.1-9.20111221hg1498.el7.noarch 17/27

[node1][DEBUG ] Verifying : python-webob-1.2.3-7.el7.noarch 18/27

[node1][DEBUG ] Verifying : pyOpenSSL-0.13.1-4.el7.x86_64 19/27

[node1][DEBUG ] Verifying : 2:ceph-base-12.2.11-0.el7.x86_64 20/27

[node1][DEBUG ] Verifying : python-cherrypy-3.2.2-4.el7.noarch 21/27

[node1][DEBUG ] Verifying : 2:ceph-mgr-12.2.11-0.el7.x86_64 22/27

[node1][DEBUG ] Verifying : python-tempita-0.5.1-6.el7.noarch 23/27

[node1][DEBUG ] Verifying : 2:ceph-osd-12.2.11-0.el7.x86_64 24/27

[node1][DEBUG ] Verifying : python-webtest-1.3.4-6.el7.noarch 25/27

[node1][DEBUG ] Verifying : 2:ceph-radosgw-12.2.11-0.el7.x86_64 26/27

[node1][DEBUG ] Verifying : 2:ceph-selinux-12.2.11-0.el7.x86_64 27/27

[node1][DEBUG ]

[node1][DEBUG ] Installed:

[node1][DEBUG ] ceph.x86_64 2:12.2.11-0.el7 ceph-radosgw.x86_64 2:12.2.11-0.el7

[node1][DEBUG ]

[node1][DEBUG ] Dependency Installed:

[node1][DEBUG ] ceph-base.x86_64 2:12.2.11-0.el7

[node1][DEBUG ] ceph-mds.x86_64 2:12.2.11-0.el7

[node1][DEBUG ] ceph-mgr.x86_64 2:12.2.11-0.el7

[node1][DEBUG ] ceph-mon.x86_64 2:12.2.11-0.el7

[node1][DEBUG ] ceph-osd.x86_64 2:12.2.11-0.el7

[node1][DEBUG ] ceph-selinux.x86_64 2:12.2.11-0.el7

[node1][DEBUG ] mailcap.noarch 0:2.1.41-2.el7

[node1][DEBUG ] pyOpenSSL.x86_64 0:0.13.1-4.el7

[node1][DEBUG ] python-babel.noarch 0:0.9.6-8.el7

[node1][DEBUG ] python-beaker.noarch 0:1.5.4-10.el7

[node1][DEBUG ] python-cherrypy.noarch 0:3.2.2-4.el7

[node1][DEBUG ] python-flask.noarch 1:0.10.1-4.el7

[node1][DEBUG ] python-itsdangerous.noarch 0:0.23-2.el7

[node1][DEBUG ] python-jinja2.noarch 0:2.7.2-3.el7_6

[node1][DEBUG ] python-logutils.noarch 0:0.3.3-3.el7

[node1][DEBUG ] python-mako.noarch 0:0.8.1-2.el7

[node1][DEBUG ] python-markupsafe.x86_64 0:0.11-10.el7

[node1][DEBUG ] python-paste.noarch 0:1.7.5.1-9.20111221hg1498.el7

[node1][DEBUG ] python-simplegeneric.noarch 0:0.8-7.el7

[node1][DEBUG ] python-tempita.noarch 0:0.5.1-6.el7

[node1][DEBUG ] python-webob.noarch 0:1.2.3-7.el7

[node1][DEBUG ] python-webtest.noarch 0:1.3.4-6.el7

[node1][DEBUG ] python-werkzeug.noarch 0:0.9.1-2.el7

[node1][DEBUG ] python2-pecan.noarch 0:1.1.2-1.el7

[node1][DEBUG ] python2-singledispatch.noarch 0:3.4.0.3-4.el7

[node1][DEBUG ]

[node1][DEBUG ] Complete!

[node1][INFO ] Running command: sudo ceph --version

[node1][DEBUG ] ceph version 12.2.11 (26dc3775efc7bb286a1d6d66faee0ba30ea23eee) luminous (stable)

[ceph_deploy.install][DEBUG ] Detecting platform for host node2 ...

[node2][DEBUG ] connection detected need for sudo

...

[node2][DEBUG ] Complete!

[node2][INFO ] Running command: sudo ceph --version

[node2][DEBUG ] ceph version 12.2.11 (26dc3775efc7bb286a1d6d66faee0ba30ea23eee) luminous (stable)

[ceph_deploy.install][DEBUG ] Detecting platform for host node3 ...

[node3][DEBUG ] connection detected need for sudo

...

[node3][DEBUG ] Complete!

[node3][INFO ] Running command: sudo ceph --version

[node3][DEBUG ] ceph version 12.2.11 (26dc3775efc7bb286a1d6d66faee0ba30ea23eee) luminous (stable)

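yjiang2@admin-node:~$

5. Configure the initial monitor(s) and gather all keys

$ ceph-deploy mon create-initial

After this completes, these keyrings should appear in the current directory:

{cluster-name}.client.admin.keyring

{cluster-name}.bootstrap-osd.keyring

{cluster-name}.bootstrap-mds.keyring

{cluster-name}.bootstrap-rgw.keyring

6. Add two OSDs

1) SSH into the Ceph nodes (node2/node3), create a directory for each OSD daemon and set its permissions. Alternatively, use a whole disk, as described under each step.

Note: node2 gets osd0 and node3 gets osd1.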

$ ssh node2

$ sudo mkdir /var/local/osd0

$ sudo chmod 777 /var/local/osd0/

$ exit

$ ssh node3

$ sudo mkdir /var/local/osd1

$ sudo chmod 777 /var/local/osd1/

$ exit

Using a disk instead:

Command format: ceph-deploy disk zap {osd-server-name}:{disk-name}. This command wipes the disk's partition table and contents.

Example: ceph-deploy disk zap node1:sdb

2) Next, run ceph-deploy from the admin node (admin-node) to prepare the OSDs.

$ ceph-deploy osd prepare node2:/var/local/osd0 node3:/var/local/osd1

Using a disk instead:

Command format: ceph-deploy osd prepare {node-name}:{data-disk}

Example: ceph-deploy osd prepare node1:sdb1:sdc

3) Finally, activate the OSDs.

$ ceph-deploy osd activate node2:/var/local/osd0 node3:/var/local/osd1

Using a disk instead:

Command format: ceph-deploy osd activate {node-name}:{data-disk-name}

Example: ceph-deploy osd activate node1:sdb1:sdc
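The directory-based OSD steps above repeat the same host:path pairs three times, so a small dry-run helper can print the whole sequence for review first. This is a sketch only: `osd_plan` is a hypothetical name, it prints commands rather than running them, it uses `mkdir -p` where the article uses plain `mkdir`, and it assumes the ceph-deploy 1.5.x `osd prepare` / `osd activate` syntax shown in this article.

```shell
#!/bin/sh
# Sketch: print (not run) the commands for directory-backed OSDs.
# osd_plan is a hypothetical helper; arguments are host:path pairs.
osd_plan() {
    for pair in "$@"; do
        host=${pair%%:*}   # text before the first ':'
        dir=${pair#*:}     # text after the first ':'
        echo "ssh $host sudo mkdir -p $dir"
        echo "ssh $host sudo chmod 777 $dir"
    done
    # prepare and activate take the full list of pairs in one call
    echo "ceph-deploy osd prepare $*"
    echo "ceph-deploy osd activate $*"
}

# The two OSDs used in this article.
osd_plan node2:/var/local/osd0 node3:/var/local/osd1
```

Printing the plan first makes it easy to spot a mistyped path before anything is created on the nodes.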

7. Copy the configuration file and admin key to /etc/ceph/ on the admin node (admin-node) and the Ceph nodes (node1/2/3)

$ ceph-deploy admin admin-node node1 node2 node3

If you get an "[ERROR] exists with different content" message such as

[ceph_deploy.admin][ERROR ] RuntimeError: config file /etc/ceph/ceph.conf exists with different content; use --overwrite-conf to overwrite

use the --overwrite-conf option:

$ ceph-deploy --overwrite-conf admin admin-node node1 node2 node3

8. Make sure you have the right permissions on ceph.client.admin.keyring

$ sudo chmod +r /etc/ceph/ceph.client.admin.keyring

9. Create the mgr daemon on node1

Starting with luminous, a running mgr daemon is required; create it with ceph-deploy mgr create node1. Without a mgr, ceph health reports "HEALTH_WARN no active mgr":

$ ceph health

HEALTH_WARN no active mgr

10. Check cluster health and OSD status

Use ps -axu|grep ceph to check the processes on each node:

//Monitor

ceph 4111 0.0 2.3 406772 35944 ? Ssl 21:07 0:00 /usr/bin/ceph-mon -f --cluster ceph --id node1 --setuser ceph --setgroup ceph

ceph 7931 0.3 3.4 640680 53132 ? Ssl 02:28 0:12 /usr/bin/ceph-mgr -f --cluster ceph --id node1 --setuser ceph --setgroup ceph

//OSD0

ceph 14452 0.2 4.6 798004 39660 ? Ssl 21:19 0:01 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph

//OSD1

ceph 14555 0.2 2.7 798004 41492 ? Ssl Jun12 0:39 /usr/bin/ceph-osd -f --cluster ceph --id 1 --setuser ceph --setgroup ceph

Run ceph -s to view the cluster status.

yjiang2@admin-node:~$ ceph health

HEALTH_OK

yjiang2@admin-node:~$ ceph -s

cluster:

id: 027d5b3c-e011-4a92-9449-c8755cd8f500

health: HEALTH_OK

services:

mon: 1 daemons, quorum node1

mgr: node1(active)

osd: 2 osds: 2 up, 2 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0B

usage: 2.00GiB used, 18.0GiB / 20GiB avail

pgs:
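For scripted checks, the first word of the `ceph health` output can be turned into an exit status. The following is a minimal sketch, assuming the output format shown in this article; `health_ok` is a hypothetical helper name, and the demonstration feeds it the two sample outputs from above instead of querying a live cluster.

```shell
#!/bin/sh
# Sketch: turn the first word of `ceph health` output into an exit status.
# health_ok is a hypothetical helper; it reads the status text from stdin.
health_ok() {
    read -r status _rest
    [ "$status" = "HEALTH_OK" ]
}

# Demonstrate with the two outputs shown in this article.
for sample in "HEALTH_OK" "HEALTH_WARN no active mgr"; do
    if printf '%s\n' "$sample" | health_ok; then
        echo "$sample -> healthy"
    else
        echo "$sample -> needs attention"
    fi
done

# Against a live cluster (requires a readable admin keyring):
#   ceph health | health_ok && echo "cluster is healthy"
```

Anything other than HEALTH_OK, including empty output, is treated as needing attention.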