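I recently started looking into EKS. EKS is AWS's managed Kubernetes service, and using it differs in a few ways from running Kubernetes on bare metal. Below is a simple learning exercise to see how to use AWS's own ALB Ingress Controller (now called the AWS Load Balancer Controller).

On bare-metal Kubernetes we usually use the Nginx Ingress Controller for layer-7 load balancing. We can of course do the same on AWS, and then expose the Nginx Ingress Controller pods through a layer-4 NLB Service. However, the AWS ALB is itself a layer-7 proxy, so we can configure it directly through the ALB controller.

The basic configuration steps are as follows: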

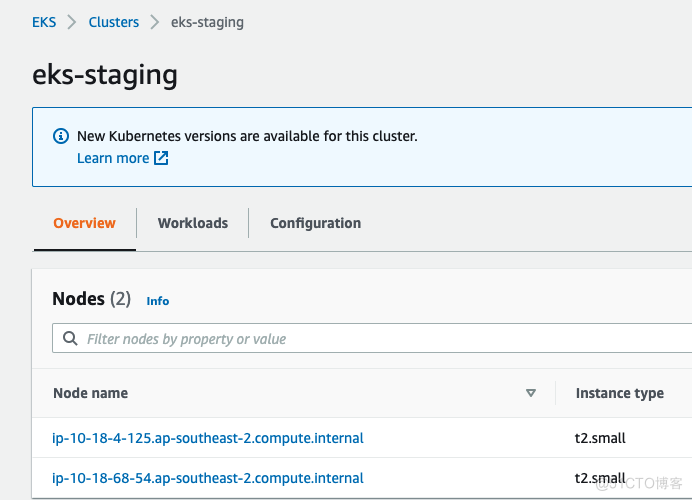

1. Set up an EKS cluster

There are many ways to do this, for example through the management console, with eksctl, or with Terraform code.

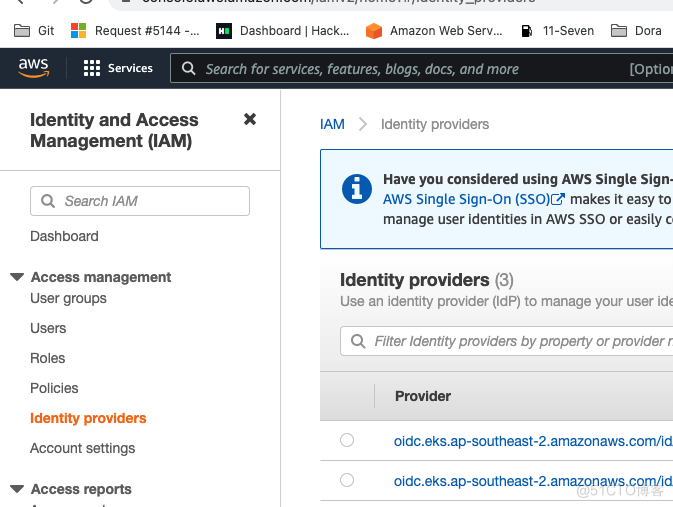

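2. Add an OpenID Connect (OIDC) provider to IAM

This step registers the EKS cluster's OpenID Connect (OIDC) issuer with IAM, so that the IAM role created later can name it as a trusted entity.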

https://docs.aws.amazon.com/eks/latest/userguide/enable-iam-roles-for-service-accounts.html
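As a rough sketch of this step from the command line, the two functions below look up the cluster's OIDC issuer and register it with IAM through eksctl. The cluster name and region are taken from the output shown later in this post. Doing this with eksctl rather than the console is an assumption, so adjust it to however you manage your cluster.

```shell
# Assumed values, taken from the controller output shown later in this post.
CLUSTER_NAME="eks-staging"
REGION="ap-southeast-2"

# Print the cluster's OIDC issuer URL.
oidc_issuer() {
  aws eks describe-cluster --name "$CLUSTER_NAME" --region "$REGION" \
    --query "cluster.identity.oidc.issuer" --output text
}

# Register that issuer as an IAM OIDC identity provider.
associate_oidc() {
  eksctl utils associate-iam-oidc-provider \
    --cluster "$CLUSTER_NAME" --region "$REGION" --approve
}

# Usage:
#   oidc_issuer
#   associate_oidc
```

Checking the issuer first is a cheap way to confirm that the CLI is pointed at the right cluster before IAM is changed.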

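3. Configure the AWS Load Balancer Controller

This is the most critical step. We need to create an IAM policy, an IAM role, a service account, and the ALB controller Deployment. If anything goes wrong here, the controller will not be able to create the ALB automatically when the Ingress is created later.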

https://docs.aws.amazon.com/eks/latest/userguide/aws-load-balancer-controller.html
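The four pieces listed above can be sketched as three shell functions. This follows the general approach of the linked AWS guide and is not necessarily the exact method used in this post. The policy name, the v2.3.1 path of the policy document (chosen to match the image tag shown below), and the Helm-based install are all assumptions.

```shell
CLUSTER_NAME="eks-staging"                        # from the controller args shown in this post
POLICY_NAME="AWSLoadBalancerControllerIAMPolicy"  # assumed name, as used in the AWS guide

# Build the ARN of the customer-managed policy for a given account ID.
policy_arn() {
  echo "arn:aws:iam::$1:policy/$POLICY_NAME"
}

# 1. Create the IAM policy from the controller's published policy document.
#    The version in the URL is an assumption; check the guide for the current one.
create_policy() {
  curl -fsSL -o iam_policy.json \
    "https://raw.githubusercontent.com/kubernetes-sigs/aws-load-balancer-controller/v2.3.1/docs/install/iam_policy.json"
  aws iam create-policy --policy-name "$POLICY_NAME" \
    --policy-document file://iam_policy.json
}

# 2. Create the IAM role and the annotated Kubernetes service account together.
create_service_account() {
  account_id="$(aws sts get-caller-identity --query Account --output text)"
  eksctl create iamserviceaccount \
    --cluster "$CLUSTER_NAME" \
    --namespace kube-system \
    --name aws-load-balancer-controller \
    --attach-policy-arn "$(policy_arn "$account_id")" \
    --override-existing-serviceaccounts \
    --approve
}

# 3. Deploy the controller with Helm, reusing the service account from step 2.
install_controller() {
  helm repo add eks https://aws.github.io/eks-charts
  helm repo update
  helm install aws-load-balancer-controller eks/aws-load-balancer-controller \
    --namespace kube-system \
    --set clusterName="$CLUSTER_NAME" \
    --set serviceAccount.create=false \
    --set serviceAccount.name=aws-load-balancer-controller
}

# Usage: create_policy && create_service_account && install_controller
```

Letting eksctl create the service account means the role's trust policy is wired to the OIDC provider from step 2 automatically, which is why that step has to come first.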

Here is the result once everything has been created:

    yuan@yuan-VirtualBox:~$ kubectl describe pod aws-load-balancer-controller-c66bd8cc5-24hlw -n kube-system
    Name:                 aws-load-balancer-controller-c66bd8cc5-24hlw
    Namespace:            kube-system
    Priority:             2000000000
    Priority Class Name:  system-cluster-critical
    Node:                 ip-10-18-68-54.ap-southeast-2.compute.internal/10.18.68.54
    Start Time:           Sun, 23 Jan 2022 23:13:53 +1100
    Labels:               app.kubernetes.io/component=controller
                          app.kubernetes.io/name=aws-load-balancer-controller
                          pod-template-hash=c66bd8cc5
    Annotations:          kubernetes.io/psp: eks.privileged
    Status:               Running
    IP:                   10.18.68.26
    IPs:
      IP:           10.18.68.26
    Controlled By:  ReplicaSet/aws-load-balancer-controller-c66bd8cc5
    Containers:
      controller:
        Container ID:  docker://36d12f699ca8d4821764c8e45d6d3c3dbf59db88547a40a809ccecb4d2d5abe5
        Image:         amazon/aws-alb-ingress-controller:v2.3.1
        Image ID:      docker-pullable://amazon/aws-alb-ingress-controller@sha256:e725a110e69af26881836a179250cd7bb418e40ecfce910ac309bfc69c80f7a4
        Port:          9443/TCP
        Host Port:     0/TCP
        Args:
          --cluster-name=eks-staging
          --ingress-class=alb
          --aws-vpc-id=vpc-3efe1e5a
          --aws-region=ap-southeast-2
        State:          Running
          Started:      Sun, 23 Jan 2022 23:14:05 +1100
        Ready:          True
        Restart Count:  0
        Limits:
          cpu:     200m
          memory:  500Mi
        Requests:
          cpu:     100m
          memory:  200Mi
        Liveness:  http-get http://:61779/healthz delay=30s timeout=10s period=10s #success=1 #failure=2
        Environment:
          AWS_DEFAULT_REGION:           ap-southeast-2
          AWS_REGION:                   ap-southeast-2
          AWS_ROLE_ARN:                 arn:aws:iam::065816533466:role/eksctl-eks-staging-addon-iamserviceaccount-k-Role1-YZY1DPHHQH28
          AWS_WEB_IDENTITY_TOKEN_FILE:  /var/run/secrets/eks.amazonaws.com/serviceaccount/token
        Mounts:
          /tmp/k8s-webhook-server/serving-certs from cert (ro)
          /var/run/secrets/eks.amazonaws.com/serviceaccount from aws-iam-token (ro)
          /var/run/secrets/kubernetes.io/serviceaccount from aws-load-balancer-controller-token-wwjn6 (ro)
    Conditions:
      Type              Status
      Initialized       True
      Ready             True
      ContainersReady   True
      PodScheduled      True
    Volumes:
      aws-iam-token:
        Type:                    Projected (a volume that contains injected data from multiple sources)
        TokenExpirationSeconds:  86400
      cert:
        Type:        Secret (a volume populated by a Secret)
        SecretName:  aws-load-balancer-webhook-tls
        Optional:    false
      aws-load-balancer-controller-token-wwjn6:
        Type:        Secret (a volume populated by a Secret)
        SecretName:  aws-load-balancer-controller-token-wwjn6
        Optional:    false
    QoS Class:       Burstable
    Node-Selectors:  <none>
    Tolerations:     node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                     node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
    Events:          <none>

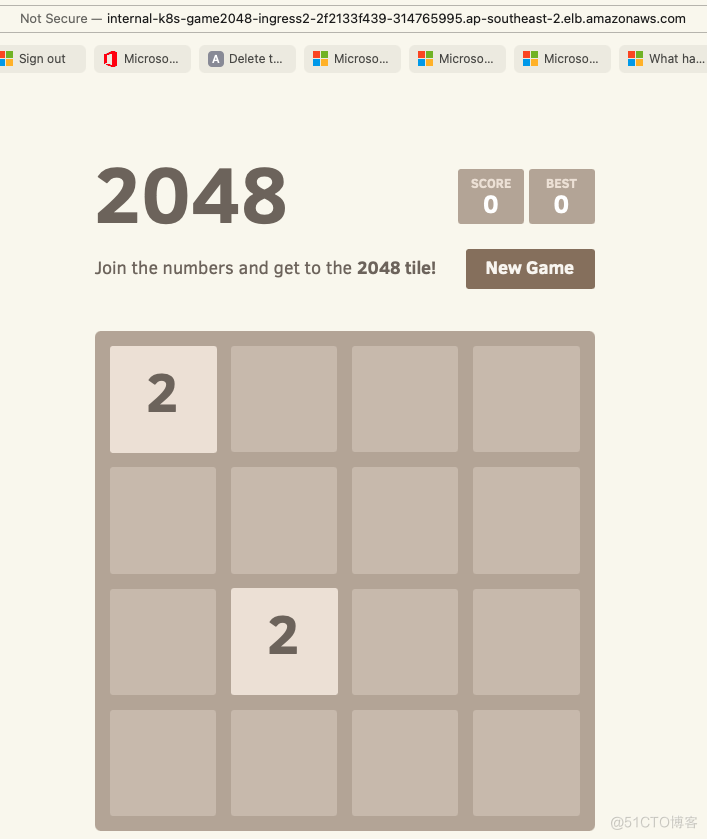

4. Deploy a sample app for testing

https://docs.aws.amazon.com/eks/latest/userguide/alb-ingress.html

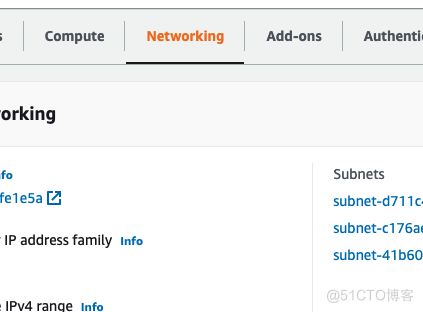

My test environment has three private subnets, so my Ingress manifest sets the scheme to internal rather than internet-facing.

    apiVersion: networking.k8s.io/v1beta1
    kind: Ingress
    metadata:
      namespace: game-2048
      name: ingress-2048
      annotations:
        kubernetes.io/ingress.class: alb
        alb.ingress.kubernetes.io/scheme: internal
        alb.ingress.kubernetes.io/target-type: ip
    spec:
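The manifest above stops at `spec:`. For reference, here is a hedged sketch of what a complete manifest could look like in the `networking.k8s.io/v1` schema, which replaces the deprecated v1beta1 API. The backend Service name `service-2048` and port 80 are assumptions based on the AWS 2048 sample app. They are not taken from this post, so substitute your own Service.

```shell
# Print a complete Ingress manifest using the networking.k8s.io/v1 schema.
# service-2048 and port 80 are assumptions based on the AWS 2048 sample app.
render_ingress() {
  cat <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  namespace: game-2048
  name: ingress-2048
  annotations:
    kubernetes.io/ingress.class: alb
    alb.ingress.kubernetes.io/scheme: internal
    alb.ingress.kubernetes.io/target-type: ip
spec:
  rules:
    - http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: service-2048
                port:
                  number: 80
EOF
}

# Usage (requires kubectl access to the cluster):
#   render_ingress | kubectl apply -f -
```

In the v1 schema `pathType` is mandatory and the backend is written as `service.name` / `service.port.number` instead of `serviceName` / `servicePort`.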

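If the configuration succeeds, the ADDRESS column shows the DNS name of the ALB. If it is empty, check the controller logs to find out what went wrong.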

    yuan@yuan-VirtualBox:~$ kubectl get ingress -A
    Warning: extensions/v1beta1 Ingress is deprecated in v1.14+, unavailable in v1.22+; use networking.k8s.io/v1 Ingress
    NAMESPACE   NAME           CLASS    HOSTS   ADDRESS                                                                                PORTS   AGE
    game-2048   ingress-2048   <none>   *       internal-k8s-game2048-ingress2-2f2133f439-314765995.ap-southeast-2.elb.amazonaws.com   80      8s
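Provisioning the ALB takes a little while, so the address may be empty at first. A minimal sketch for polling it from a script is shown below. The function names, the 30 attempts, and the 10-second interval are illustrative choices rather than anything prescribed by the controller.

```shell
# Print the ALB DNS name assigned to an Ingress (empty if none exists yet).
# Arguments: namespace, ingress name.
ingress_address() {
  kubectl get ingress "$2" -n "$1" \
    -o jsonpath='{.status.loadBalancer.ingress[0].hostname}'
}

# Poll until the address appears or the attempts run out.
wait_for_address() {
  attempts=0
  while [ "$attempts" -lt 30 ]; do
    addr="$(ingress_address "$1" "$2")"
    if [ -n "$addr" ]; then
      echo "$addr"
      return 0
    fi
    attempts=$((attempts + 1))
    sleep 10
  done
  echo "no address after 30 attempts; check the controller logs" >&2
  return 1
}

# Usage:
#   wait_for_address game-2048 ingress-2048
```

Because the scheme here is internal, the returned hostname only resolves to private addresses and has to be tested from inside the VPC.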

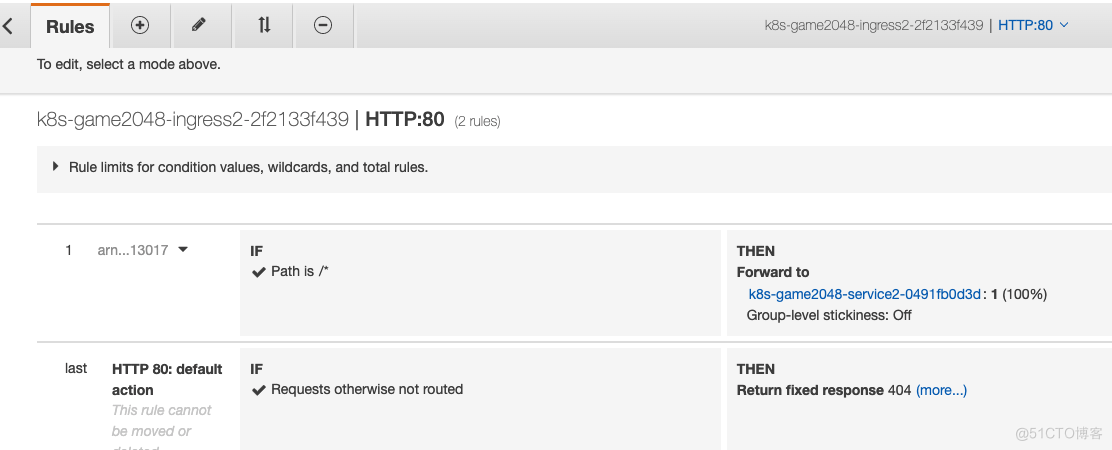

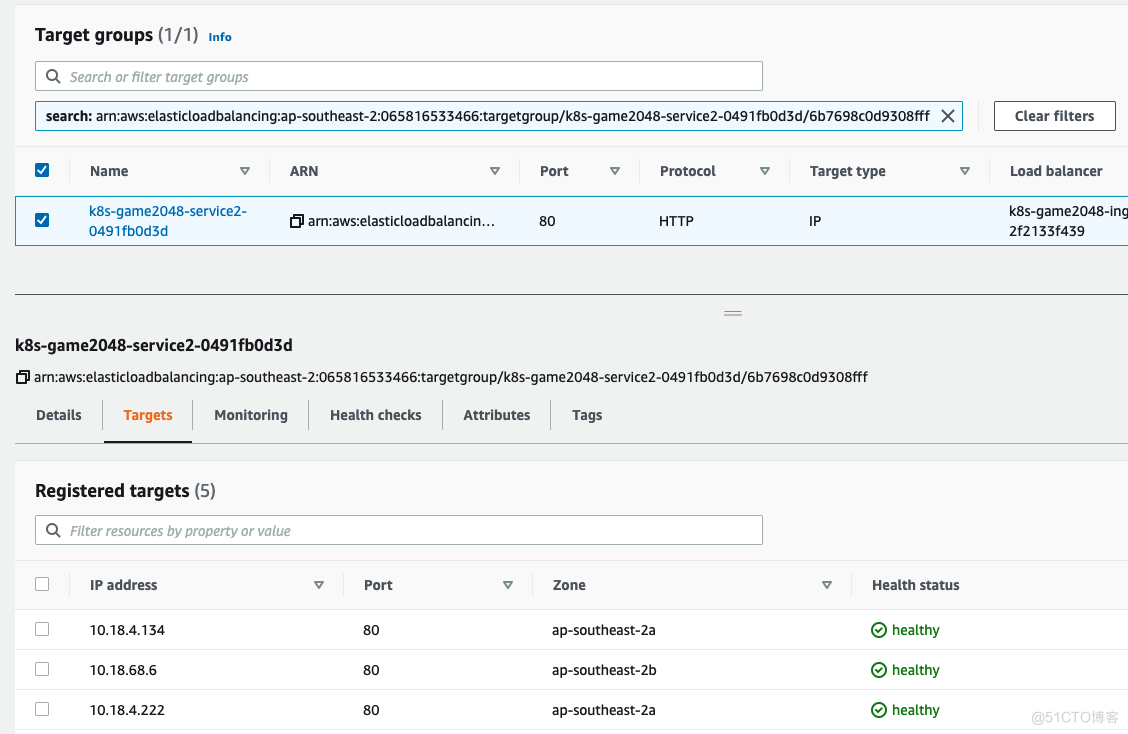

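The result is shown above.

In the AWS console, you can see that the target group behind the ALB has the pod IPs registered directly, because the target type is ip.

5. Troubleshooting

I ran into one problem during setup: after I created the Ingress, the controller could not auto-discover the subnets used by the EKS cluster. The logs of the ingress controller pod showed the following errors: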

{"level":"error","ts":1642939080.3030522,"logger":"controller-runtime.manager.controller.ingress","msg":"Reconciler error","name":"ingress-2048","namespace":"game-2048","error":"couldn't auto-discover subnets: unable to discover at least one subnet"}

{"level":"error","ts":1642939091.1972742,"logger":"controller-runtime.manager.controller.ingress","msg":"Reconciler error","name":"ingress-2048","namespace":"game-2048","error":"couldn't auto-discover subnets: unable to discover at least one subnet"}

{"level":"error","ts":1642939112.3932755,"logger":"controller-runtime.manager.controller.ingress","msg":"Reconciler error","name":"ingress-2048","namespace":"game-2048","error":"couldn't auto-discover subnets: unable to discover at least one subnet"}
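Since the same reconciler error repeats on every retry, it helps to collapse the log to its distinct error messages. The sketch below assumes the Deployment is named `aws-load-balancer-controller`, which is inferred from the pod and ReplicaSet names shown earlier. The helper names are made up for illustration.

```shell
# Reduce controller log lines on stdin to the distinct "error" messages.
summarize_errors() {
  sed -n 's/.*"error":"\([^"]*\)".*/\1/p' | sort -u
}

# Fetch recent controller logs. The Deployment name is inferred from the
# pod name shown earlier in this post.
controller_logs() {
  kubectl logs -n kube-system deployment/aws-load-balancer-controller --tail=200
}

# Usage:
#   controller_logs | summarize_errors
```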

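The fix is to add the required tags to the subnets (see https://docs.aws.amazon.com/eks/latest/userguide/alb-ingress.html). For an internal ALB, that means tagging the private subnets with kubernetes.io/role/internal-elb set to 1. Subnets created by eksctl are tagged automatically. I was reusing existing subnets, so the tags were missing and I had to add them by hand.

Be careful when copying a tag key straight from a web page: it is easy to paste an invisible whitespace character along with it, and the tag then silently fails to match. I was stuck on this for a long time before I noticed a stray trailing tab (\t) on the key, as the output below shows: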

    aws ec2 describe-subnets --subnet-ids subnet-d711c48e --region ap-southeast-2
    {
        "Subnets": [
            {
                "AvailabilityZone": "ap-southeast-2c",
                "AvailabilityZoneId": "apse2-az2",
                "AvailableIpAddressCount": 228,
                "CidrBlock": "10.18.132.0/24",
                "DefaultForAz": false,
                "MapPublicIpOnLaunch": false,
                "MapCustomerOwnedIpOnLaunch": false,
                "State": "available",
                "SubnetId": "subnet-d711c48e",
                "VpcId": "vpc-3efe1e5a",
                "OwnerId": "065816533466",
                "AssignIpv6AddressOnCreation": false,
                "Ipv6CidrBlockAssociationSet": [
                    {
                        "AssociationId": "subnet-cidr-assoc-01c61166d19311fe1",
                        "Ipv6CidrBlock": "2406:da1c:a6b:8a84::/64",
                        "Ipv6CidrBlockState": {
                            "State": "associated"
                        }
                    }
                ],
                "Tags": [
                    {
                        "Key": "Tf",
                        "Value": "1"
                    },
                    {
                        "Key": "Subnet_Class",
                        "Value": "snet"
                    },
                    {
                        "Key": "Environment",
                        "Value": "staging"
                    },
                    {
                        "Key": "Name",
                        "Value": "staging-snet-ap-southeast-2c"
                    },
                    {
                        "Key": "kubernetes.io/cluster/eks-staging",
                        "Value": "shared"
                    },
                    {
                        "Key": "kubernetes.io/role/internal-elb\t",
                        "Value": "1"
                    }
                ],
                "SubnetArn": "arn:aws:ec2:ap-southeast-2:065816533466:subnet/subnet-d711c48e"
            }
        ]
    }
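A stray tab like the one in the last tag is invisible in the console but shows up as `\t` in the JSON output, so it can be caught with a simple filter. The sketch below flags suspicious keys and then replaces the bad tag. The function names are hypothetical, and the filter only covers escaped tabs, newlines, carriage returns, and leading or trailing spaces.

```shell
REGION="ap-southeast-2"   # region used throughout this post

# Read `aws ec2 describe-subnets` JSON on stdin and print every tag key line
# that contains an escaped tab/newline/carriage return or a leading/trailing space.
find_bad_tag_keys() {
  grep -E '"Key": *"([^"]*\\[tnr][^"]*| [^"]*|[^"]* )"'
}

# Replace the tab-suffixed key with the clean one on a single subnet.
# The JSON form of --tags is used so that \t is decoded to a real tab character.
fix_internal_elb_tag() {
  aws ec2 delete-tags --region "$REGION" --resources "$1" \
    --tags '[{"Key":"kubernetes.io/role/internal-elb\t"}]'
  aws ec2 create-tags --region "$REGION" --resources "$1" \
    --tags 'Key=kubernetes.io/role/internal-elb,Value=1'
}

# Usage:
#   aws ec2 describe-subnets --subnet-ids subnet-d711c48e --region "$REGION" | find_bad_tag_keys
#   fix_internal_elb_tag subnet-d711c48e
```

Running the filter again after the fix should print nothing, and the controller should then discover the subnet on its next reconcile.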